
Assessing Your Organization’s Digital Asset Management Needs

27 August 2025

Choosing a Digital Asset Management (DAM) system is a high-stakes process, but it can also be energizing and collaborative when done right. Whether you’re replacing a legacy system or starting from scratch, the first step is understanding what people need. This means listening carefully, mapping what’s working and what’s not, and building shared enthusiasm for what a DAM can unlock.

Start with People

Before you dive into features or vendors, start with people. They are the foundation of this work: find them, talk with them, and the rest will start to fall into place. A DAM system’s success depends on the constituency that uses and supports it, so identifying and engaging the right voices is essential.

Who makes or uses the digital assets?

Think broadly about anyone who creates, manages, approves, uses, or delivers digital assets. That might include:

- Content creators, designers, and editors

- Marketing and communications teams

- Archivists and records managers

- Product or project managers

- IT and security staff

- Legal, compliance, and risk officers

- Executive sponsors and decision-makers

- Funders or departments responsible for system costs

To identify the right stakeholders, ask:

- Who touches assets from creation through to delivery and preservation?

- Who makes decisions about DAM staffing, training, and long-term support?

- How is the DAM currently funded—or how will it be funded in the future?

Start broad. As you engage people across roles and departments, a smaller group will naturally emerge with deeper involvement, insight, and decision-making responsibility. These are your core stakeholders—the people who will help shape the system and carry it forward.

Listen for Insights

Stakeholder input isn’t just helpful—it’s essential. These conversations shape your goals, expose pain points, and clarify what your DAM needs to support. Engaging the right people early gives you a clearer view of how assets are really managed—and where the friction lives.

As you talk with them, don’t just focus on workflows. Ask about long-term support: Who will own the DAM? Is IT prepared to manage integrations, infrastructure, and security? Is there funding or staffing available to maintain governance, training, and standards? These questions are just as important as functional needs and should guide your assessment from the start.

Start with short, focused interviews. Skip surveys, which often yield surface-level feedback. Instead, speak one-on-one or in small groups. Record conversations (with permission) so you can revisit the details. Ask open-ended, practical questions like:

- What tools do you use to create, manage, or find digital assets?

- What do you wish were easier?

- What already works well?

- How do you handle rights or metadata?

- What slows you down or creates confusion?

- If you had a magic wand, what functionality would you ask for?

Pay attention to the language people use. It’s invaluable when you begin writing requirements or explaining priorities to vendors.

Organize and Prioritize What You’ve Learned

Once you’ve gathered enough feedback, use a simple rubric to organize and prioritize what you’ve learned. This helps you spot patterns, identify gaps, and guide planning. Assessment isn’t just a step toward a decision; it’s how you learn what success will require. It shows you not only what’s broken but also what’s working, what people hope for, and what you’ll need to prioritize.

Your assessment should help you answer:

- Where are the friction points in your asset lifecycle?

- What are the root causes of confusion, delays, or errors?

- What already works well and could be scaled?

- What would help your teams collaborate better or move faster?

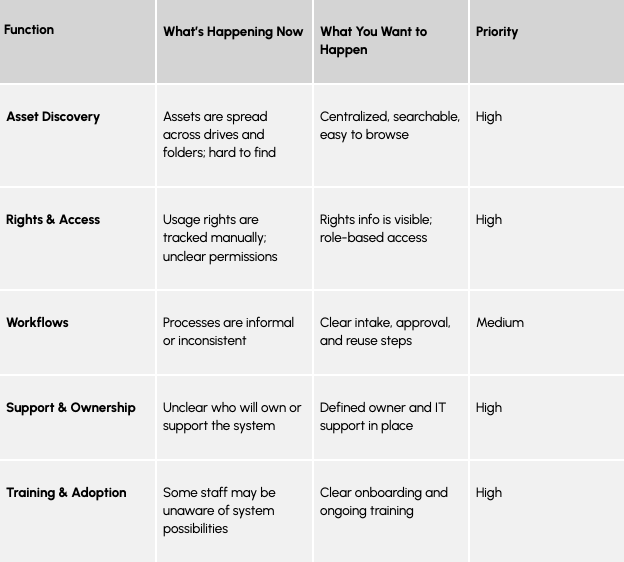

A useful way to organize this thinking is with a simple rubric:

This high-level rubric helps turn qualitative insights into a shared understanding of your current landscape. It can surface high-impact gaps, clarify priorities, and serve as a foundation for your implementation roadmap or RFP.
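If your interview notes are already tagged by theme, a quick tally can show which friction points come up most often before you fill in the rubric. This is a minimal sketch, assuming notes have been coded by hand into a set of themes per stakeholder; the roles and theme labels below are hypothetical.

```python
from collections import Counter

# Hypothetical coded interview notes: each stakeholder role maps to the set
# of themes (pain points or wishes) raised in their conversation.
interviews = {
    "designer": {"slow search", "unclear rights", "duplicate files"},
    "marketing lead": {"slow search", "unclear rights"},
    "archivist": {"missing metadata", "duplicate files"},
    "legal": {"unclear rights"},
}


def tally_themes(notes):
    """Count how many stakeholders raised each theme (once per person)."""
    counts = Counter()
    for themes in notes.values():
        counts.update(themes)
    return counts


def who_raised(notes, theme):
    """List the stakeholders who mentioned a theme, for follow-up questions."""
    return sorted(person for person, themes in notes.items() if theme in themes)


if __name__ == "__main__":
    total = len(interviews)
    for theme, n in tally_themes(interviews).most_common():
        people = ", ".join(who_raised(interviews, theme))
        print(f"{theme}: {n} of {total} stakeholders ({people})")
```

Counting each theme once per person, rather than once per mention, keeps one very vocal stakeholder from dominating the picture.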

Keep Listening, Keep Refining

At the heart of a successful DAM assessment are people—the users, decision-makers, and behind-the-scenes teams who rely on digital assets every day. Their insights are the source of your best ideas.

Information gathering isn’t a one-time process. Keep asking questions. Keep listening. As your understanding deepens, your priorities will evolve, and your system requirements will sharpen. The more inclusive and user-driven your approach, the more likely you are to select a DAM that meets your real needs and earns long-term support.

What may feel like a jumble of tools, frustrations, and hopes now will eventually turn into clear priorities, confident decisions, and, most importantly, a system that fits the way your organization actually works.

Focus the Vision for Your Digital Assets

With input from your stakeholders in hand, it’s time to define a shared vision for what the DAM is meant to accomplish. That vision comes to life through clear, outcome-driven business objectives. These objectives articulate the why behind the DAM: why it matters, what it will change, and how you’ll know it’s working.

Business objectives help you prioritize features, align teams, and communicate the system’s value to leadership. They keep the project focused, especially when you’re evaluating trade-offs or making decisions down the line.

Before diving into detailed requirements, ask: What does success look like with a digital asset management system in place? Are you aiming to reduce legal risk? Speed up campaign delivery? Preserve institutional knowledge? These goals shape every step of your selection and implementation process and are communicated through business objectives.

A strong business objective answers:

“What are we trying to improve, fix, or enable with this system?”

Sample Business Objectives:

- Reduce time spent searching for assets by 50% to support faster content delivery: Teams currently spend significant time locating approved visuals and files. Reducing this friction will help meet tight publishing timelines and improve responsiveness.

- Ensure only licensed assets are used in public materials: Inconsistent tracking of usage rights increases legal and reputational risk. A DAM should help enforce compliance and make rights information visible and actionable.

- Consolidate digital assets created by different users and stored in disparate systems: Assets are currently spread across local drives, cloud folders, and legacy tools. Centralizing them will support discoverability, collaboration, and long-term access.

Whenever possible, tie business objectives to measurable outcomes. For example: “Reduce asset search time by 50%” or “Ensure 100% of publicly used assets have visible rights metadata.” These goals can help you evaluate vendors—and later, your DAM’s performance.
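Measurable objectives like these reduce to simple before-and-after arithmetic. The sketch below assumes you have a baseline measurement (for example, average minutes to find an approved asset, gathered through interviews or a timed task) and an asset inventory that flags public use and rights metadata. The field names `public` and `rights` and all figures are hypothetical.

```python
def percent_reduction(baseline: float, current: float) -> float:
    """Percentage drop from a baseline measurement to a current one."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - current) / baseline * 100


def rights_coverage(assets: list[dict]) -> float:
    """Percentage of publicly used assets that carry rights metadata."""
    public = [a for a in assets if a["public"]]
    if not public:
        return 100.0  # nothing is public, so nothing is missing rights data
    with_rights = sum(1 for a in public if a.get("rights"))
    return with_rights / len(public) * 100


if __name__ == "__main__":
    # Hypothetical average search time in minutes, before and after launch.
    reduction = percent_reduction(baseline=12.0, current=5.0)
    status = "met" if reduction >= 50 else "not met"
    print(f"Search time reduced by {reduction:.1f}% (target 50%): {status}")

    # Hypothetical inventory: "rights" holds a license note or None.
    inventory = [
        {"id": "img-001", "public": True, "rights": "CC BY 4.0"},
        {"id": "img-002", "public": True, "rights": None},
        {"id": "vid-003", "public": False, "rights": None},
    ]
    coverage = rights_coverage(inventory)
    print(f"Rights metadata on {coverage:.0f}% of public assets (target 100%)")
```

Capturing the baseline during the assessment matters: without it, there is nothing to compare against once the DAM is live.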

Is a New Digital Asset Management System Actually Needed?

One of the goals of a good assessment is clarity. Sometimes that clarity reveals that a new DAM isn’t the right next step. You might discover that your existing system could work with better training, governance, or configuration. Or you may find that the real issue isn’t the technology, but the lack of shared standards or ownership. That’s still progress. A thoughtful assessment can help you solve the right problems, whether or not that includes replacing your DAM.

Next Steps: From Insight to Action

Whether your assessment points to the need for a new DAM or uncovers ways to improve the one you already have, the outcome is the same: you now know what you didn’t know before. It’s time to turn that insight into a plan.

Start by organizing what you’ve learned into something clear and shareable, such as a spreadsheet, a shared doc, or whatever helps your team keep track of it all. Consolidate data and priorities in one place to prepare for internal planning, vendor conversations, or decision-making.

As you move toward system selection or renewal, take a beat to assess your organizational readiness:

- Who will own the DAM long-term?

- Is IT prepared to support infrastructure, integrations, and identity management?

- Do you have staff or governance in place to manage the operation of the system?

A successful digital asset management system depends on more than just features. It also needs committed people, long-term support, and a structure that can grow with your organization. As you talk with stakeholders and gather input, make sure to document what you’re learning—key themes, priorities, pain points, and goals. Documenting these early insights will help shape shared understanding and keep things grounded as you move into planning and decision-making.

Selecting the Perfect DAM for Your Organization

22 August 2024

Choosing the right Digital Asset Management (DAM) system can feel overwhelming, especially with the myriad of options and the complexities involved in the selection process. If you’re like many organizations, you may find yourself questioning your choices, feeling uncertain about your requirements, or unsure of how to navigate the procurement landscape. This guide dives deep into the essential steps and considerations for selecting the ideal DAM system for your organization.

Understanding Your Needs

The first step in selecting a DAM solution is understanding your organization’s specific needs. This process involves more than just listing features; it requires a comprehensive assessment of your current assets, workflows, and user requirements.

- Stakeholder Engagement: Engage with different teams to gather insights about their needs and pain points. This ensures that the selected system will cater to the diverse requirements of all users.

- Discovery Process: Conduct interviews and surveys to identify what users expect from the DAM system. This step is crucial as it uncovers needs that might not be immediately obvious.

- Documentation: Document all findings in a clear manner. This will serve as a reference throughout the selection process.

The Importance of a Structured RFP Process

A well-structured Request for Proposal (RFP) is vital in the DAM selection process. It not only communicates your needs to potential vendors but also sets the tone for how they will respond.

- Clarity in Requirements: Clearly outline your requirements using user stories or scenarios. This helps vendors understand the context behind your needs.

- Prioritization: Prioritize your requirements into mandatory, preferred, and nice-to-have categories. This helps vendors focus on what’s most important to your organization.

- Engagement: Allow stakeholders to participate in the RFP process. Their involvement increases the likelihood of buy-in and adoption later on.
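To make user stories and the three priority tiers concrete, here is a small sketch that stores each requirement as persona, need, and outcome, then lists them for the RFP in priority order. The class names, field names, and sample requirements are hypothetical illustrations, not a prescribed RFP format.

```python
from dataclasses import dataclass
from enum import IntEnum


class Priority(IntEnum):
    """Priority tiers; lower values are listed first in the RFP."""
    MANDATORY = 1
    PREFERRED = 2
    NICE_TO_HAVE = 3


@dataclass
class Requirement:
    persona: str  # a role with its article, e.g. "a DAM manager"
    need: str     # what the persona needs to do
    outcome: str  # why it matters
    priority: Priority

    def as_user_story(self) -> str:
        return f"As {self.persona}, I need to {self.need} so that {self.outcome}."


def rfp_rows(requirements: list[Requirement]) -> list[str]:
    """Number requirements in priority order. sorted() is stable, so
    requirements keep their original order within each tier."""
    ordered = sorted(requirements, key=lambda r: r.priority)
    return [
        f"R{i}. [{r.priority.name.replace('_', ' ').title()}] {r.as_user_story()}"
        for i, r in enumerate(ordered, start=1)
    ]


if __name__ == "__main__":
    reqs = [
        Requirement("an archivist", "export assets together with their metadata",
                    "we can preserve them outside the DAM", Priority.NICE_TO_HAVE),
        Requirement("a marketing creative", "upload assets in batch with metadata applied automatically",
                    "new assets are findable as soon as they arrive", Priority.MANDATORY),
        Requirement("a DAM manager", "be notified when new uploads arrive",
                    "I can review them before they are used", Priority.PREFERRED),
    ]
    for row in rfp_rows(reqs):
        print(row)
```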

Common Pitfalls in DAM Selection

Many organizations fall into common traps when selecting a DAM system. Avoiding these pitfalls can save you time and money.

- Ignoring User Needs: Skipping the discovery process can lead to selecting a system that does not meet the actual needs of users.

- Over-Reliance on Recommendations: Choosing a system based solely on a colleague’s recommendation can be misleading. What works for one organization may not work for another.

- Underestimating Costs: Focusing only on the initial purchase price without considering implementation, training, and ongoing costs can lead to budget overruns.
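A rough total-cost-of-ownership estimate makes the gap between sticker price and real cost visible. This sketch assumes costs split into one-time items (implementation, training) and recurring items (the license or subscription, plus ongoing support such as staff time and integration upkeep) over a multi-year contract. The categories and every figure are hypothetical.

```python
def total_cost_of_ownership(annual_license: int, implementation: int,
                            training: int, annual_ongoing: int, years: int) -> int:
    """One-time costs plus recurring costs over the contract term."""
    one_time = implementation + training
    recurring = (annual_license + annual_ongoing) * years
    return one_time + recurring


if __name__ == "__main__":
    # Hypothetical three-year contract; replace with real vendor quotes
    # and internal staffing estimates.
    annual_license = 15_000
    tco = total_cost_of_ownership(annual_license=annual_license, implementation=20_000,
                                  training=5_000, annual_ongoing=10_000, years=3)
    license_share = annual_license * 3 / tco * 100
    print(f"Three-year TCO: ${tco:,} (license is {license_share:.0f}% of it)")
```

With these made-up numbers, the license is less than half of what the organization actually spends over the term.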

Evaluating Vendor Responses

Once you’ve sent out your RFP, the next step is to evaluate the responses from vendors. This involves more than just looking at prices; it requires a thorough analysis of how each vendor meets your specific needs.

- Scoring System: Develop a scoring system to compare vendor responses based on how well they meet your requirements. This allows for an apples-to-apples comparison.

- Demos: Schedule vendor demos focused on your specific use cases. This helps you see how the system performs in real-world scenarios relevant to your organization.

- Qualitative Feedback: Collect feedback from stakeholders who attend the demos to gauge their impressions and preferences.
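One way to build the scoring system is a weighted score per vendor. This is a minimal sketch, assuming each requirement carries one of the three priority tiers and evaluators rate each response from 0 (not met) to 4 (fully met). The weights, the rating scale, and flagging any mandatory requirement rated 0 are assumptions for illustration, not a standard method.

```python
# Hypothetical weights per priority tier; tune these with your core team.
WEIGHTS = {"mandatory": 3, "preferred": 2, "nice-to-have": 1}
MAX_RATING = 4  # evaluators rate each response 0 (not met) to 4 (fully met)


def score_vendor(requirements: dict[str, str],
                 ratings: dict[str, int]) -> tuple[float, list[str]]:
    """Return the weighted score as a percentage, plus any mandatory
    requirements the vendor failed outright (rated 0 or unanswered)."""
    earned = possible = 0
    unmet_mandatory = []
    for req_id, priority in requirements.items():
        weight = WEIGHTS[priority]
        rating = ratings.get(req_id, 0)  # an unanswered requirement scores 0
        if not 0 <= rating <= MAX_RATING:
            raise ValueError(f"{req_id}: rating {rating} outside 0-{MAX_RATING}")
        earned += weight * rating
        possible += weight * MAX_RATING
        if priority == "mandatory" and rating == 0:
            unmet_mandatory.append(req_id)
    return earned / possible * 100, unmet_mandatory


if __name__ == "__main__":
    requirements = {"R1": "mandatory", "R2": "mandatory",
                    "R3": "preferred", "R4": "nice-to-have"}
    responses = {
        "Vendor A": {"R1": 4, "R2": 3, "R3": 2, "R4": 4},
        "Vendor B": {"R1": 4, "R3": 4, "R4": 4},  # no answer for R2
    }
    results = {name: score_vendor(requirements, r) for name, r in responses.items()}
    for name, (score, unmet) in sorted(results.items(), key=lambda kv: -kv[1][0]):
        flag = f"missing mandatory {', '.join(unmet)}" if unmet else "meets all mandatory"
        print(f"{name}: {score:.1f}% ({flag})")
```

Keeping the mandatory check separate from the percentage means a vendor cannot offset a missing must-have with strong scores on nice-to-haves, and the demos and qualitative feedback can then focus on the vendors that clear that bar.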

Understanding Customization and Configuration

Many organizations grapple with the concepts of customization and configuration during the DAM selection process. Understanding the difference is crucial.

- Configuration: This involves setting up the system using available features without altering the underlying code. It’s generally easier and cheaper to implement.

- Customization: This entails modifying the software to meet specific needs, which can be more complex and costly. Be sure to inquire about the implications of customization during vendor discussions.

Managing the Implementation Timeline

Timing is everything in the DAM selection process. Many organizations underestimate how long it takes to select and implement a new system.

- Anticipate Delays: Factor in time for vendor responses, stakeholder feedback, and potential procurement delays.

- Implementation Timeline: A typical DAM selection process can take several months, so start early to avoid rushed decisions.

- Post-Selection Support: Ensure that you have a plan for training and onboarding users once the system is selected.

Conclusion: Making the Right Choice

Selecting the right DAM system is a significant decision that can impact your organization for years to come. By following a structured process, engaging stakeholders, and carefully evaluating options, you can make an informed choice that meets your organization’s needs. Remember, the goal is not just to choose a system, but to select a solution that enhances your workflows and improves the management of your digital assets.

For more resources on DAM selection, including checklists and guides, visit AVP’s Free Resources.

Transcript

Chris Lacinak: 00:00

Amy Rudersdorf, welcome to the DAM Right podcast.

Amy Rudersdorf: 02:15

Yeah, thanks for the opportunity. I’m really excited to be here.

Chris Lacinak: 02:17

So for folks that don’t know, you are the Director of Consulting Operations at AVP. And I’ve asked you to come on today because you’ve just written a piece called Creating a Successful DAM RFP, and you’ve included with it a bunch of really useful handouts. And so I wanted to just dive into that and have our listeners better understand what the process is, what the value of it is, why it’s important, what happens if you don’t do it, so on and so forth. But I’d love to just start, if you could tell us, what is the expertise and experience and background that you bring to this topic?

Amy Rudersdorf: 02:54

Sure. So before I came to AVP, I was working in government and academic institutions where we had to go through a procurement process to buy large technologies. And so I’ve seen this process from the client side. I know what the challenges are. I know that this can be a really time-consuming process and really challenging if you don’t know how to do it. And then when I came to AVP, I had the opportunity to help guide clients through this process. And over the years, we’ve really refined what I think is a great workflow for ensuring that our clients get the right technology that they need.

Chris Lacinak: 03:33

And you’ve been doing this for years, as you say. So I’m curious, why now? What inspired you to write this piece after refining this for so many years? Why is now a good time to do it?

Amy Rudersdorf: 03:44

I think the main… Well, there are a couple of reasons, but one of them is that there’s just been in the last couple of years a proliferation of systems. There are hundreds of systems out there that we call DAM or MAM or PIM or PAM or digital preservation. There are all kinds of systems. From a pricing standpoint, DAMs range from as low as $100 a month to six figures annually. And the market is really catering to a diverse set of needs from B2B to cultural heritage to martech, and then your general-purpose asset management systems. And I’ve seen organizations recognize that it’s really important to do it right. They want to make sure that when they acquire technology, it’s something that’s going to work for their institution for the long term. But they really struggle with how to do it. So what I hope through this piece is that I can help individuals and organizations with this step-by-step guide to successfully procure their own technology without us, and maybe in addition, see the value of working with an organization like AVP.

Chris Lacinak: 05:00

And how would you describe who this piece and these checklists are for?

Amy Rudersdorf: 05:06

Well, I would say specifically, they’re for organizations looking to procure a DAM. And this could be your first DAM or moving from a DAM to an enterprise DAM technology or MAM. So that’s the specific audience. But really, if someone’s looking to procure a technology, the process is going to be very similar. And so many of these checklists will be useful to those folks as well.

Chris Lacinak: 05:38

Yeah, I think it is important. You’ve kind of touched a couple times on, you know, the piece is called, or calls out specifically DAM. As you mentioned, and it’s worth reiterating, we’ve talked about it here on the podcast before, but we use a very broad interpretation of DAM to include things like, as you mentioned, MAM, PIM, PAM, digital preservation, so on and so forth. So it’s good to know that this is useful for folks looking for any of those technologies in the broader category of DAM. For someone out there considering procuring a DAM and thinking, you know, we don’t need an RFP process or we don’t need to use this complex, time-consuming process, is it still useful for them? Or are there things that they can grab out of this piece, even if they don’t want to go through the full process?

Amy Rudersdorf: 06:32

Well, my initial response to this question is, you should be considering the RFP process. And if not a full RFP process, at least an RFI, which is a request for information as opposed to a request for proposals. The RFI is a much more lightweight approach. But in either case, I feel like this document, this set of checklists is useful for anyone thinking about getting a DAM. Because the checklists step you through not just how to write an RFP, but also how to gather the information you need to communicate to vendors. So if you look at checklist number two, for instance, it really focuses on discovery and how to undertake the stakeholder engagement process, which you’ll want to do whether or not you’re writing an RFP. You really need to understand your user needs before you set out to identify systems that you might want to procure.

Chris Lacinak: 07:39

Yeah, that’s a good point. And maybe it’s worth saying that for smaller organizations maybe that aren’t required to use an RFP process, that what you’ve put down here, when I look at it, I think of it’s kind of the essential elements of an RFP, right? You might give this to an organization that then wraps a bunch of bureaucratic contractual language around it and things like that. But this is the essence of an organization-centered or user-centered approach to finding an RFP or finding a DAM that fits. So let’s talk about what are the pitfalls that people run into when they’re procuring a DAM system?

Amy Rudersdorf: 08:25

So I’ll start by saying, I think it’s really important that when you’re procuring a technology that you talk to your colleagues in the field and see what they’re using. But just, as much as that’s important, I will say that’s also a major pitfall if you do that, if that’s your only approach. Because you may have a colleague who uses a system they love, it does everything they need it to do, and they say to you, “Yeah, you should definitely buy this system.” But the reality is that that system works for them in their context and your context, your stakeholders are very different. And so that assumption is, I think, flawed. You have to go through a stakeholder engagement and discovery process where you’re talking to your users and finding out what they need, what their requirements are in order to communicate to vendors what it is that you need that system to do for you, as opposed to what it’s doing for your colleagues. I’ll say, Kara Van Malssen posted a LinkedIn post a few weeks ago, and it was really useful. It’s the eight worst ways to choose a DAM based on real world examples. And one of those is choose the system that your colleague recommends. And as she says, your organization’s use cases are totally different from theirs. I think there’s also the pitfall of, you go to a conference and you meet a salesperson, they were really nice, the DAM looked great, it did everything that they said it could do. But when you’re at a conference, that salesperson is on their best behavior, and they’ve got a slick presentation to show you. So just approaching this with a multifaceted approach is going to be far more effective than just saying, my colleague likes it, or I saw it at a conference. You combine all of those things together as part of your research to find the system that works for you.

Chris Lacinak: 10:44

Yeah. And that makes me think of requirements and usage scenarios, which I want to dive into. But before we go there, I want to just ask a similar question, but with a different slant, which is, what’s the risk of not getting this right, of selecting the wrong DAM?

Amy Rudersdorf: 11:02

Yeah, so the risk is huge. I think DAMs are not cheap. I would say that’s the first thing. You do not want to purchase or sign a contract, which is typically multi-year, with a vendor for a system that doesn’t work for you. You will be miserable. And I think more importantly, your users will be miserable. And this will cause work stoppage, potentially loss of assets, and it could be a financial loss to the organization. Not doing this right will have repercussions all the way down the line for the organization, and you’ll be hurting for years to come.

Chris Lacinak: 12:00

Yeah, I think one thing we’ve seen is an organization, maybe they go out and they buy a cheap DAM, and maybe they think, “Well, you know what? It’s cheap. If it doesn’t work, we only spent, what, $15,000 or $20,000,” or whatever the case may be. Not realizing that that might be the cheapest part, right? Because you got to get organizational buy-in, you got to train people, you got to onboard them. And then it goes wrong, or it goes, you know. And we’ve seen this. We’ve come in on the heels of this. Where like, there’s a loss of trust. There’s poor morale. People don’t believe that it’s going to go right this time. So yeah, there’s a lot to lose there, and it’s more than just the cost of the DAM system, as you point out. So let’s jump back to requirements and usage scenarios. So you talk quite a bit about the importance of getting requirements and usage scenarios documented and getting them right. Could you just talk a bit about those two things, how they relate to each other, and then we’ll kind of dive in and I’ll ask you for some examples of each of those.

Amy Rudersdorf: 13:03

Okay. Well, this is where I’ll probably start to nerd out a little bit. But you’re going to, as the centerpiece of your RFP, communicate your needs. And when I say your, I mean your organizational needs for a new system. So you will be representing the needs of your stakeholders, if you’re doing it right. Their challenges or pain points, their wishlist, all of that needs to be communicated to vendors in a clear and concise manner that they can interpret appropriately and provide answers that are meaningful to you so that you can then analyze the responses in such a way that you can understand whether that system will work for your organization. So structuring your requirements and your usage scenarios, we call them usage scenarios at AVP, lots of people call them use cases, but structuring those correctly is going to be the part that gets you the responses you need in order to make a data-driven decision.

Chris Lacinak: 14:20

And I’ve heard you talk about before, I mean, to that point, I guess, we have seen RFPs in which the question that is posed is, can you do this thing to the vendor? And the vendor just simply has to check a yes or no box. To your point, I think from what I’ve seen from your work is like, you really get to how do you do this thing so that there’s much more information around it. So it sounds like structuring those, getting those right and structuring them in the right way is going to give you not a yes or no answer, which is often misleading and unhelpful and things, but like a much more nuanced answer.

Amy Rudersdorf: 14:56

I think the other part is, you want to help the vendor understand. You want to work with them to get the best outcome from this process. And so giving them as much context as you can is important too. And that’s why we structure our requirements the way we do so that the vendor sees what the need is, but also understands why we’re asking for it.

Chris Lacinak: 15:20

That’s a really good point. That is something that you hear vendors complain about with RFPs that they don’t provide enough information. And I want to ask you later about what ruffles the feathers of vendors, but let’s keep on the requirements and usage scenarios. So can I ask, you said most people call them use cases, AVP calls them usage scenarios. Why is that?

Amy Rudersdorf: 15:42

Well, a use case is just a standalone narrative of, it’s a step-by-step narrative of what the needs are for a system. So you’re telling a story about a user going through a process or a series of processes. A usage scenario offers context beyond that. So you provide some background information. Why is this usage scenario important? Well, you’re explaining that we’re asking you to respond to this because this is our problem. And so providing, again, it’s that context. So the vendor understands why you’re asking for something or why you need something. It just makes their answers better. They’re more informed. I think they feel more confident in their responses. And so it’s just a little bit more context around the use case than just a standalone use case.

Chris Lacinak: 16:38

What does a well-crafted requirement look like?

Amy Rudersdorf: 16:44

So at AVP, we use the user story structure, which comes out of the agile development process. It’s basically an informal sort of general explanation of a software feature that’s written from the perspective of an end user or a customer. And we call them personas. So as part of your user story, it’s a three-part structure. So as a persona, or the person that needs something to happen, I need to do something so that something is achieved. So a standard requirement is just a statement of a need. But here you can see there’s a real person. So this is user-driven content. There’s a real person who has a real need because something really needs to be achieved. And I think that structure is really powerful. I just said “really” a lot of times. But there are a lot of examples for how to build user stories on the web. And again, just giving that vendor as much context as possible. You have to think about you’re handing over to these vendors 20-page documents that they have to sift through to try to understand what your needs are and how to match them to their system. And so any background you can give them, any context you can give them, is just going to be a win for everyone. So it really will impact whether you’re seeing responses from the vendors that align with your needs or not. I think it provides clarity in the process that the standard requirement structure doesn’t offer.

Chris Lacinak: 18:31

Right. So I’ll go out on a limb here and venture a guess that a bad requirements list might be a list of bullet points, something like integrations, video, images, things like search, things like that. Not a lot of context, not a lot of useful information.

Amy Rudersdorf: 18:49

The other thing to keep in mind is these have to be actionable. So you can’t say “fast upload.” Every vendor is going to say “yeah, our upload is super fast.” But you could say, “As a creative, I need to upload five gigabyte video files in under 30 seconds” or something like that. You want them to be something that a vendor can respond to so that you get a useful response.

Chris Lacinak: 19:24

How might you explain what a well-crafted usage scenario looks like?

Amy Rudersdorf: 19:30

Sure. So usage scenarios are, as I said earlier, they’re these step-by-step narratives. So it’s a story about a user moving through the system. They flow in a logical order. They cover all of the relevant steps, all the decision-making points. They are user-centric. So the scenario should define who the user is. We always use real users in our usage scenarios. So we’ll have identified some of the major personas from the client’s organization. And that might be, like I said, a creative. It might be the DAM Manager. It’s real people who work in their organization. We don’t name them by name, but we name them by their title. So that this is truly representing the users. So it’s the story of the user performing tasks. And every usage scenario should have clear objectives, outlining what the user is trying to achieve. And it’s their specific tasks. They’re solving a problem. So it might be, for example, just this isn’t how you would write it, but it might be a story about a marketing creative who needs to upload assets in batch and needs to ensure that metadata is assigned to those assets automatically every time they’re uploaded. And the DAM Manager is pinged when those new uploads are in the system so that they can review them. So that might be a story that you would tell in a usage scenario. It’s realistic. It’s based on real people. And it represents real challenges that users face.

Chris Lacinak: 21:26

Yeah, that makes a lot of sense. There’s a lot of things that we see in marketing and in communication around the power of stories. I can imagine that that is a more compelling and meaningful way to communicate to vendors. It makes me wonder, in your experience in working with organizations, you craft this story and someone listening might think that’s information that’s at the ready that just simply needs to be put into story form. But I’m curious, you put a lot of emphasis on discovery and talking to different stakeholders. And I’m just curious, how useful is this process to people within an organization coming up with these stories? Are they at the ready? Or is it through the discovery process that they’re able to synthesize and really understand to be able to put it into that form?

Amy Rudersdorf: 22:21

Yeah, I would say if you take nothing away from this discussion except the fact that discovery is absolutely necessary as part of your technology procurement process, it’s that. Discovery is the process of interviewing your users and stakeholders to understand what their needs are, their current pain points are, and what they wish the system could do. That’s it in a nutshell. And I have never had a core team or the person leading the project on the client side say, “Oh, I already knew all that.” Time and time again, their eyes are opened to new challenges, new needs from these users. So, it’s a really powerful process. I think this is taking it a little off topic, but just to ensure that you have buy-in from your stakeholders, bringing them in at the beginning of the process is key. So it’s a benefit for you in that you learn what they need, you learn how they use systems today and what they need the system to do in the future, but you’ve also kind of got them engaged in the process as well. They see that they’re important and that you’re making decisions on their behalf and thinking of them as the system is being procured. And all of that together, I think, is really powerful and can only make for a better procurement process.

Chris Lacinak: 23:58

Yeah. Wow, that really points out the value of the process. Earlier I was asking about the pitfalls, or about someone who doesn’t want to go through the RFP process. If somebody just threw together an RFP without going through the process, it would be a very different RFP from one written after going through it. It also sounds like the process solidifies things that never show up in an RFP: they materialize as greater adoption, more executive buy-in, and other benefits you wouldn’t get if you skipped it.

Amy Rudersdorf: 24:35

Absolutely.

Chris Lacinak: 24:36

Let me ask about the buy-in side, or really, I think, more about adoption. In discovery, one of the challenges has to be that you talk to, let’s say, 10 different people, and each person has many requirements they want to list. Maybe one is in creative ops, one is in marketing, one is in a more administrative role. They are different stakeholders with different focus points, and they all give you lots of requirements. On one hand, I have to think it’s important for those to be represented, so someone doesn’t look at the list and say, “Well, none of my stuff is in there. This system’s not right for me.” On the other hand, it has to be a huge load. How do you prioritize so that you both represent everyone and make sure the most important needs are up front?

Amy Rudersdorf: 25:31

Right. Well, it’s definitely a team process. First, to provide a little context: when we wrote requirements and user stories in the past, we would write 150 of them. Now we try really hard not to do that, because it’s really hard on vendors to ask them to respond to 150 requirements. So we try to synthesize what the users are telling us and home in on the key needs. That doesn’t mean we disregard different users’ needs. But in some cases, a need is something every DAM can meet, so there’s no reason to include it in the requirements list. You want to be able to search? They can all search, so that should be fine. Once you have your requirements list, which I think in a healthy RFP is probably in the range of 50 or so requirements, it’s up to the organization to prioritize those requirements. As a company, we write the user stories on behalf of the client, but then we give them the list and say, “Now prioritize these. This is your part of the process.” Typically, this is the core team’s job. When we work with a client, there are usually two to four people on the client core team, and they are either sitting in on the discovery interviews, reading transcripts, or otherwise really engaged in the process, so they understand what these priorities look like. By the time they get that list, they should be able to sit down as a group and identify the priorities. The priority levels we use are mandatory, preferred, and nice to have. So if someone is really vocal about wanting a requirement in the list, we can always call it nice to have. It’s there, but the system isn’t required to do it.

Chris Lacinak: 27:59

So it sounds like that’s done through a workshop or group process where folks are able to discuss the requirements. That seems innately valuable: people are heard, they have the conversation, and even if a requirement isn’t marked mandatory, they still feel it’s represented in some way.

Amy Rudersdorf: 28:23

Yeah. I also wanted to say that gathering these requirements from users is obviously really important, as I’ve said, but engaging them throughout this process is also really valuable. So don’t just ask them at the beginning what they need; let them come to demos and things like that, too. It’s going to make implementation and buy-in much more successful.

Chris Lacinak: 28:51

You’ve got these requirements. You’ve got these usage scenarios. You create a bunch of things to hand over to a vendor. I’m wondering how you manage apples-to-apples comparisons, because there’s going to be such a wide variety in how vendors respond. And how do you compare pricing to make sure there are no surprises down the road?

Amy Rudersdorf: 29:16

Well, I’m going to set the pricing question aside for a second. The way we do it at AVP is, I think, a methodology that is unique in the RFP process: we’ve created a quantitative methodology. We create the requirements, and the client prioritizes them. The vendor responds to them in a certain way, and then we’re actually able to score those responses based on priority. If a requirement is mandatory, it’s going to count for more. If the vendor says the system does it out of the box, they’re going to get a higher score; if they say it has to be customized, they’re going to get a lower score. So we create a scoring structure that lets us hand the client data they can look at: side-by-side scores for all of the respondents to the RFP. Pricing is really tricky. It is so complicated. Every vendor prices their system completely differently, so we have to spend a lot of time digging into the details to understand where the pricing is coming from and what the year-to-year pricing looks like. Then we provide a side-by-side analysis of that as well. It’s really tricky to do, but in the end, the client gets data they can base their decisions on. You also asked about avoiding surprises when it comes to pricing. I think this is the hardest thing to talk about when you’re buying technology, and it’s probably the case for lots of different types of technology, not just the DAM, MAM, PIM, and PAM world. This information is not widely available on the web. You can’t go to a vendor’s website and see how much an annual subscription or license is going to cost you.
The reason is that there are so many dependencies in their pricing: how much storage you need and what that storage growth looks like over time; how many users you have and what type (some vendors base their pricing on seats, meaning the number of users you have in each of their user categories); and service level agreement (SLA) levels, so if you want the gold standard, it’s going to cost more. So the costs are going to be unique to your situation. Just to toot our own horn, we know this market really well, so if somebody asks me how much an annual license from vendor X costs, I can tell them. But that just comes with years of experience. Otherwise, it’s the Wild West out there as far as pricing goes.
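The priority-weighted scoring described above can be sketched in a few lines. This is a minimal illustration, not AVP’s actual methodology: the point values (3/2/1 for mandatory, preferred, and nice to have; 3/2/1/0 for out of the box, configuration, customization, and not supported), the requirement names, and the vendor names are all hypothetical assumptions.

```python
from enum import Enum


class Priority(Enum):
    # Illustrative weights (assumption): mandatory requirements count most.
    MANDATORY = 3
    PREFERRED = 2
    NICE_TO_HAVE = 1


class Response(Enum):
    # How a vendor says it meets a requirement; point values are assumptions.
    OUT_OF_THE_BOX = 3
    CONFIGURATION = 2
    CUSTOMIZATION = 1
    NOT_SUPPORTED = 0


def score_vendor(priorities, responses):
    """Sum priority weight x response points over every prioritized requirement.

    A requirement the vendor did not answer is treated as NOT_SUPPORTED.
    """
    return sum(
        weight.value * responses.get(req, Response.NOT_SUPPORTED).value
        for req, weight in priorities.items()
    )


# Hypothetical requirements, as prioritized by the client core team.
priorities = {
    "bulk upload with metadata": Priority.MANDATORY,
    "rights and permissions": Priority.MANDATORY,
    "full-text search": Priority.PREFERRED,
    "InDesign previews": Priority.NICE_TO_HAVE,
}

# Hypothetical RFP responses from two vendors.
vendor_responses = {
    "Vendor A": {
        "bulk upload with metadata": Response.OUT_OF_THE_BOX,
        "rights and permissions": Response.CONFIGURATION,
        "full-text search": Response.OUT_OF_THE_BOX,
        "InDesign previews": Response.NOT_SUPPORTED,
    },
    "Vendor B": {
        "bulk upload with metadata": Response.CUSTOMIZATION,
        "rights and permissions": Response.OUT_OF_THE_BOX,
        "full-text search": Response.CONFIGURATION,
        "InDesign previews": Response.OUT_OF_THE_BOX,
    },
}

scores = {vendor: score_vendor(priorities, r) for vendor, r in vendor_responses.items()}

# Side-by-side view, highest score first: prints "Vendor A: 21" then "Vendor B: 19".
for vendor, total in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{vendor}: {total}")
```

Multiplying priority by response type is one simple design choice: a customization answer on a mandatory requirement still outweighs an out-of-the-box answer on a nice-to-have, which keeps attention on the requirements the organization cares about most.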

Chris Lacinak: 32:48

You know that I know that you want to get your hands on Amy’s how-to guide and handouts for DAM selection. Come closer and I’ll tell you where to find it. Closer. I don’t want anyone else to hear this. Okay. It’s weareavp.com/creating-a-successful-dam-rfp. That’s where the guide is. Here’s where you get the handouts. It’s weareavp.com/free-resources. Okay. Now delete those URLs once you download them. I don’t want that getting out to just anyone. All right. Talk to you later. Bye.

Two thoughts here. You talked about the spreadsheet analysis and scoring, but I know you dive deeper than that: part of your comparative analysis process is demos as well. That makes me think of a couple of things. One is using demos as a tool in the apples-to-apples comparison. Two, you have this list of requirements and usage scenarios, and some solutions can probably meet them out of the box, while others probably need custom development or some sort of workflow development to meet them. Could you talk a little bit about the role of demos, and about custom configuration as it relates to pricing?

Amy Rudersdorf: 34:20

Yeah. A demo is a general term that can mean many different things in this realm. Vendors love to give demos, and they would love to spend an hour and a half with you telling you how great their system is. That’s their job, and their system may be great, so that’s okay. But that’s not how you base a purchasing decision. You see those bells-and-whistles demos to get a sense of what the system looks like. What we typically do (and we’re experimenting with different ways to do this now) is that after the RFP responses come back, let’s say you get six, you choose your top three, and then you spend two hours in a demo with each vendor. The vendor does not get to set the agenda. We do. In that demonstration, they respond to some of the usage scenarios we wrote for the RFP. For example: for 15 minutes, talk to us about the uploading usage scenario I mentioned earlier, and to do that, here are assets and metadata from the organization that you must use in your examples. Now you’re seeing side-by-side demonstrations of how the systems work with your data. I think that’s really powerful, because now you might see the system move a little slower with that five-gigabyte movie you have, and it’s not quite as slick as it is with the assets they typically use in their demos. So you get a real sense of how the system works. As part of those demonstrations, we always have the clients fill out feedback forms. We get some qualitative responses: What did you like? What other questions do you have?
But we also get quantitative responses: on a scale of one to five, score this vendor on the usage scenario you saw. Did they do what they said the system could do? So again, we’re trying to set up opportunities for that apples-to-apples comparison.

Chris Lacinak:

And how about the custom configuration aspects? I guess this really goes back to both pricing and timeline, right? How do you manage that through the RFP process?

Amy Rudersdorf:

I think that’s probably one of the toughest things. Vendors differ on how involved they want to be in customization and configuration. Some systems require lots of configuration but not so much customization. Maybe we should define those terms. Configuration means pressing some buttons behind the scenes to make something happen, like turning a feature on or off. Customization means writing code to make the system do what you need it to do. So configuration should be cheaper and easier than customization, and from a cost and timeline perspective, configuration is less of a challenge, because typically the vendor can do it as part of the offering. Customization is different. If something is custom, we ask the vendor to tell us how much time it’s going to take and how much it’s going to cost, so that’s in their proposal as well. Getting to that point can be challenging. You have to be very specific and clear about what you need. An example would be integration, which is something everyone asks for in an RFP. DAMs aren’t systems that stand alone in your organization. They integrate with collections management systems or marketing technologies.
So understanding, for instance, who is responsible for building the integration and maintaining it, and knowing that up front, is super important. If a vendor says, “Yeah, we can do that,” make sure they explain how that happens, what it’s going to cost, and what the real cost is going to be for you. So I’d just say that you’re your own best advocate. If you have a question, ask it, and ask them to document the answer.
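The one-to-five feedback-form scores from scripted demos can be rolled up into a side-by-side view. This is a minimal sketch under stated assumptions: the vendor names, scenario names, and scores are hypothetical, and a plain average is only one of several reasonable ways to combine reviewers’ ratings.

```python
from statistics import mean

# Hypothetical feedback-form data: vendor -> usage scenario -> reviewer scores (1-5).
feedback = {
    "Vendor A": {"upload": [4, 5, 4], "search": [3, 4, 3]},
    "Vendor B": {"upload": [2, 3, 3], "search": [5, 4, 5]},
}


def summarize(feedback):
    """Average each vendor's 1-5 scores per scenario, plus an overall mean."""
    summary = {}
    for vendor, scenarios in feedback.items():
        raw = {}
        for scenario, scores in scenarios.items():
            # Reject empty or out-of-range ratings rather than silently averaging them.
            if not scores or any(not 1 <= s <= 5 for s in scores):
                raise ValueError(f"{vendor}/{scenario}: scores must be between 1 and 5")
            raw[scenario] = mean(scores)
        summary[vendor] = {
            "per_scenario": {name: round(m, 2) for name, m in raw.items()},
            # Overall is the mean of the unrounded per-scenario means.
            "overall": round(mean(raw.values()), 2),
        }
    return summary


summary = summarize(feedback)
for vendor, result in summary.items():
    print(vendor, result["per_scenario"], "overall:", result["overall"])
```

Keeping per-scenario averages visible matters here: in this made-up data the two vendors end up with similar overall scores, but one is stronger on upload and the other on search, which is exactly the kind of difference a scripted demo with your own assets is meant to surface.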

Chris Lacinak: 39:44

Speaking of vendors, what have you heard from vendors in response to the RFPs you’re proposing people create in this process? Do they love them? Do they hate them? How have they been received by vendors, generally speaking?

Amy Rudersdorf: 40:01

Well, we have actually reached out to vendors and asked them this question, and I have heard on a number of occasions that they really like the RFPs we put together for them because they’re so clear: they understand what we’re asking and why we’re asking it. Going back to the point I made earlier about not having 150 requirements, the vendors appreciate that as well. It’s a lot of work for them to respond to these, and we don’t want this to be onerous or overly complicated for them. So we’ve really tried to create RFPs that serve the client foremost but also make the process as pain-free as possible for the vendors. And we’ve gotten feedback from a number of them that they like the way we present the data.

Chris Lacinak: 41:04

Having been on the responding side of RFPs, I will say that one of the things you worry about in that position is whether the customer can make an apples-to-apples comparison: making sure the appropriate context is there, that they fully understand, and that you have all the right information to provide the right responses. So everybody has a vested interest in being clear and transparent, right? That’s helpful to everybody. And I imagine it also helps vendors opt out. A vendor might look at an RFP and say, “This really is not our strong spot. We should not spend the time on this.” So it’s probably helpful to them to be able to filter out what is and isn’t in their wheelhouse.

Amy Rudersdorf: 41:50

Yeah, absolutely. I think it’s important to recognize that we don’t work in a vacuum. We are vendor-neutral as a company, but we work very closely with the sales teams at lots of different vendor companies, and we want to be partners with them as well. We want them to be successful, whoever they are, and whatever we can do to make sure they’re able to show off their system as well and as appropriately as possible is a win for everyone. So I really do keep that perspective in mind when I’m putting these documents together.

Chris Lacinak: 42:40

So what are some of the things you’ve heard vendors complain about with regard to RFPs? Not ours, of course; other people’s RFPs. What are the things that would turn a vendor off, make them not want to respond, or make them feel poorly about an RFP?

Amy Rudersdorf: 42:57

I think I’ve mentioned this a couple of times now. One of the common deterrents is just the overwhelming number of requirements. And when they’re not written as user stories, they can be really confusing and hard to interpret, and probably pretty frustrating to respond to. The other challenge, and I talk to clients about this all the time, is that you can’t make every requirement mandatory, because there is not going to be a system out there that can do absolutely everything you want as an out-of-the-box, turnkey solution. In my opinion, it’s unreasonable to ask for that. Making sure you’re really prioritizing those requirements helps vendors see that you’ve really thought about this and that you understand what you’re asking for and what your needs really are. So prioritization isn’t a deterrent; it’s a positive. But flipping that, making every requirement mandatory is probably really frustrating. I would also say there are two sides to this: there’s excessively detailed or overly complex, and then there’s not enough information to provide a useful response. Finding the sweet spot, where you’re giving them the context, background, and information they need without overwhelming them, is important. And we’ve all seen a poorly structured RFP: something that lacks a clear vision, is ambiguous or vague, or is filled with grammatical errors and spelling mistakes. It just makes everybody look bad. If I were responding to that, I would question the organization and its dedication to this process.

Chris Lacinak: 45:04

We should point out that you’re a hardcore grammarian.

Amy Rudersdorf: 45:08

I am.

Chris Lacinak: 45:09

So let’s talk about timeline. You talked about who the guide and checklist are for, and you said they’re for people who are maybe getting their first DAM, or maybe their second or third, because they’ve already got one. When is the right time to start the RFP process?

Amy Rudersdorf:

People are surprised at how long this process takes. At AVP, it is a 20-week process, so that’s five months, and that’s with all the milestones kept tight and the process moving along quickly. That’s just how long it takes. So if your contract is coming up in a year, work backwards from that: you need a solid six months or more for implementation, so you should be working on that RFP now. If you do it right, expect this process to take a solid four or five months. Then you also have to build in a buffer for your procurement office and your InfoSec reviews. All of these things can take even longer. So as soon as you realize you’re going to get a new system, start work on that RFP.
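Working backwards from a contract renewal date is simple date arithmetic. The sketch below assumes 20 weeks for the RFP process (the figure given in the interview, which includes the vendor response window), 26 weeks as an approximation of “a solid six months” of implementation, and a hypothetical eight-week buffer for procurement and InfoSec review; the contract date is made up.

```python
from datetime import date, timedelta

RFP_WEEKS = 20                 # RFP process length cited in the interview
IMPLEMENTATION_WEEKS = 26      # approximation of "a solid six months or more"
PROCUREMENT_BUFFER_WEEKS = 8   # hypothetical buffer for procurement and InfoSec review


def latest_rfp_start(contract_end: date, buffer_weeks: int = PROCUREMENT_BUFFER_WEEKS) -> date:
    """Latest date to start the RFP so the new DAM is live when the old contract ends."""
    total_weeks = RFP_WEEKS + buffer_weeks + IMPLEMENTATION_WEEKS
    return contract_end - timedelta(weeks=total_weeks)


# Hypothetical: the current DAM contract ends on 30 June 2026.
contract_end = date(2026, 6, 30)
print(latest_rfp_start(contract_end))  # prints 2025-06-17
```

Because the buffer is a parameter, it is easy to see how quickly the start date moves earlier when procurement or security review is expected to be slow; with a one-year runway, 20 weeks of RFP plus 26 weeks of implementation leaves only about six weeks of slack.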

Chris Lacinak: 46:40

And we should say that the five months you mentioned includes a pause while you wait for vendors to respond to the RFP, right? How long is that period typically?

Amy Rudersdorf: 46:54

I usually say a month. I think it’s sort of inhumane to make them respond in less than a month. These are complicated. They want to make sure they’re getting it right, and we want them to get it right. So a month is a solid amount of time. We also build in time for them to ask questions, and they can’t really start working on it until they get the answers back. So I think a month is the sweet spot.

Chris Lacinak: 47:28

You’re right. I feel like we frequently run into people who hear five months and are a little put off by how long that sounds. And then it is extraordinarily common for contracting and security to take significantly longer than that to get through. That’s something people often underestimate, especially if they’re not used to working through procurement. It’s something people really need to consider as part of their timeline.

Amy Rudersdorf: 47:57

This is real; it happened yesterday. I have a client who was very adamant that we shorten the time frame by a month. So we had compacted all of our work into four months, and they came back to me and said, “We’re going to need more time, so let’s go with your original timeline.” And then he said, “It’s like you know what you’re doing.”

Chris Lacinak: 48:24

Yeah, that happens sometimes. There has been a concept lately, though, that I’ve heard repeated consistently: a fast-track selection process that is significantly faster. Do you have thoughts about that? Is that a realistic thing? Does it sacrifice too much? Is it possible as long as you’re willing to accept X, Y, and Z risks? What’s the fastest you might be able to do a selection process if someone really pushed you?

Amy Rudersdorf: 49:00

That’s a tough question. There are people who talk about this fast-track process. I think you’re putting yourself at risk if you don’t at least spend the time you need with your end users and stakeholders. Whatever else you do to make the process go faster, whether that’s skipping the RFP and just inviting vendors to do their demos, I still think spending time with your stakeholders is going to be really important, along with drafting their requirements in a way that communicates them to the vendors, so that when vendors demo, they demo with your user needs in mind. I think you could do that. I’m not entirely sold on it; I think our process works really well. But if someone came to us and said, “We want to do it a different way,” I think we’d be willing to discuss other methods.

Chris Lacinak: 50:08

It makes me think: say I’m thinking of a shape, Amy, and I want you to draw it, and I give you a number of dots to draw it with. If I give you three dots, the chances of you getting that shape right are pretty slim. If I give you 50 dots, you’re more likely to get the shape I’m thinking of, because you can connect the dots. It seems that if you fast-track it, you’re going to miss some dots and be less likely to get it right. And now that we’re talking about it, it’s about weighing risk and reward. Let’s say the fastest you could do this, with some level of certainty that everybody was willing to accept, was three months, but you increased your chance of getting it wrong by 30%. We talked earlier about the risks of getting it wrong. On its face, that’s obviously not worth it. It’s not just the cost of the DAM system. You’d have to get all those stakeholders back, do discovery again, and go through the process again, and everybody’s burnt. They’re unhappy about it. The thing didn’t work. It failed. Now that we talk about it, it just seems obvious that that’s not a great idea.

Amy Rudersdorf: 51:25

You’ve broken their trust. I’ve seen this in implementation too: if you invite your stakeholders into the system before it’s ready, or when it’s not doing what they need it to do, they’re going to hesitate to come back. And if you have to go through this process all over again, I just can’t see it working. You’re going to lose their trust.

Chris Lacinak: 51:56

You can imagine the Slack message already: “Hey, did you see that? I went in there and nothing’s in there. I couldn’t find anything.” All of a sudden, that starts creating poor morale around the system.

Amy Rudersdorf: 52:07

Yeah, it gives me shivers.

Chris Lacinak: 52:10

Yeah. In your piece, you go through the whole RFP process. We haven’t gone through it here because it’s rather lengthy and a lot to talk about, so we’ll leave that for folks to read in the piece. But I’m curious: when you see people do this on their own, without the advantage of an expert like yourself guiding them, what’s the single most important part of the process that you see people skip?

Amy Rudersdorf: 52:40

Well, I think it’s the discovery process. It’s getting in front of your users and stakeholders. Without that information, you don’t know what you need. And you can only guess at what you need based on your personal experience.

Chris Lacinak: 53:00

So people think, “Oh, I know what my users need. I’ve been working with these people for years. I can tell them.” Or maybe like, “I know what we need better than anybody else. I’m just going to write it down.”

Amy Rudersdorf: 53:08

I don’t know if I said this already, but I’ve never had anyone say, “Oh yeah, I knew all that,” after they went through the discovery process. Time and again, they’re like, “Wow, I had no idea.”

Chris Lacinak: 53:18

I bet.

Amy Rudersdorf: 53:20

Yeah. It’s pretty interesting to talk to the core team after the discovery process is complete, because they often sit in on these interviews, and you can just see their eyes pop when they hear things they had no idea about. That happens every time we go through this process.

Chris Lacinak: 53:48

So we’ll put a link to your piece in the show notes. I’m curious, though: when people download the handouts, what’s in there? What can people expect to see?

Amy Rudersdorf: 54:01

It’s six checklists that guide you through the entire RFP process, from developing your problem statement to selecting your finalists. Some of the checklists are things you need to do, so they step you through discovery and how to structure your RFP. But there are also checklists you can include in your RFP itself. We always have an overview document that introduces the RFP to the vendors, and there’s a very long checklist of specific questions that vendors have to answer. Those questions are in the download, and they’re in the actual RFPs we create as well. So we’re offering a little bit of our IP to users.

Chris Lacinak: 54:56

So it’s the things that you would use for yourself as part of the process.

Amy Rudersdorf: 55:01

Yeah.

Chris Lacinak: 55:02

That’s great. Now for the question I ask everybody on the DAM Right podcast: what is the last song you added to your favorites playlist?

Amy Rudersdorf: 55:12

Oh, I’ll tell you right now. I have it right in front of me.

Chris Lacinak: 55:17

Great.

Amy Rudersdorf: 55:18

Heart of Gold by Neil Young.

Chris Lacinak: 55:19

What were the circumstances there?

Amy Rudersdorf: 55:21

He’s back on Spotify. He had left Spotify. They pulled all of his stuff off Spotify. And I realized he was back. And so I grabbed that song. Yeah. Four days ago.

Chris Lacinak: 55:32

That’s right. Well, thank you so much for joining me and sharing your expertise and your experience and all this great information. It’s been fun having you on. I really appreciate you taking the time.

Amy Rudersdorf: 55:41

Yeah. Thanks again for the opportunity to talk about a topic that some people might not find very exciting, but I do.

Manage Your DAM Expectations: A Guide to Successful Implementation

25 July 2024

In the world of digital asset management (DAM), the journey of implementation can often feel overwhelming. However, with the right approach and mindset, organizations can navigate this process smoothly. This guide explores key insights on managing expectations during a DAM implementation, drawing parallels to the experience of moving into a new home.

Understanding the Need for a DAM System

Every successful DAM implementation begins with understanding the reasons behind the need for a new system. Organizations often face several pain points, leading them to seek a more efficient solution. Here are some common reasons organizations consider moving to or adopting a new DAM system:

- Centralization of Assets: Staff frequently struggle to find images or videos scattered across various platforms like Dropbox, Google Drive, and email. A DAM system centralizes these assets, making retrieval easier and faster.

- Control Over Asset Usage: Misuse of assets is a significant concern. Organizations often find their images on social media or websites without proper permissions. A DAM system helps establish control over how assets are used.

- Unlocking Hidden Treasures: Many organizations have digitized assets that remain underutilized. A DAM system can help make these assets available for broader use.

Deciding What You Need

Once the reasons for adopting a DAM system are clear, the next step is to define what is needed from the system. Organizations should identify three to five key differentiators or deal breakers when evaluating potential DAM solutions. Here are some considerations:

- Budget: Always a crucial factor, understanding the financial implications will guide your decisions.

- Technical Requirements: Determine whether you need on-premises hosting or prefer a vendor-hosted solution.

- Format Compatibility: Ensure the DAM can handle specific file formats essential for your operations, such as InDesign files.

- Functional Needs: Identify critical functionalities, like full-text search capabilities, that the system must support.
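One way to apply three to five deal breakers is as pass/fail checks run before any detailed scoring, so that candidates that cannot meet a non-negotiable need are set aside early. The sketch below is illustrative only: the product names, budget ceiling, hosting preference, and format codes are hypothetical assumptions, not recommendations.

```python
from dataclasses import dataclass, field


@dataclass
class Candidate:
    # Hypothetical attributes gathered from vendor materials or demos.
    name: str
    annual_cost: int
    hosting: str                       # "on-premises" or "vendor-hosted"
    formats: set[str] = field(default_factory=set)
    full_text_search: bool = False


# Deal breakers expressed as named pass/fail checks (thresholds are illustrative).
DEAL_BREAKERS = {
    "within budget": lambda c: c.annual_cost <= 60_000,
    "vendor-hosted": lambda c: c.hosting == "vendor-hosted",
    "handles InDesign": lambda c: "indd" in c.formats,
    "full-text search": lambda c: c.full_text_search,
}


def shortlist(candidates):
    """Return (names passing every check, {failing name: [checks it failed]})."""
    passing, failing = [], {}
    for c in candidates:
        failed = [label for label, check in DEAL_BREAKERS.items() if not check(c)]
        if failed:
            failing[c.name] = failed
        else:
            passing.append(c.name)
    return passing, failing


candidates = [
    Candidate("DAM One", 45_000, "vendor-hosted", {"jpg", "indd", "mp4"}, True),
    Candidate("DAM Two", 80_000, "vendor-hosted", {"jpg", "mp4"}, True),
    Candidate("DAM Three", 30_000, "on-premises", {"jpg", "indd"}, False),
]

passing, failing = shortlist(candidates)
print("Shortlist:", passing)
for name, reasons in failing.items():
    print(f"{name} ruled out: {', '.join(reasons)}")
```

Recording which checks each candidate failed, rather than just dropping it, keeps the reasoning transparent when stakeholders ask why a familiar product did not make the shortlist.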

Planning and Scoping Your MVP

Creating a clear plan and defining a Minimum Viable Product (MVP) are essential steps before implementing a DAM system. Unlike moving into a new home, where the process is somewhat familiar, DAM implementation can be murky for many organizations. Here are common pitfalls to avoid:

- Resource Allocation: Organizations often underestimate the internal resources required for implementation; sometimes one person needs to focus full-time on the migration process.

- Major Migration Planning: If moving a large volume of data, thorough planning is critical. Transferring data from multiple systems can be complex and time-consuming.

- Over-Ambition: Organizations sometimes aim to do too much before going live. Focusing on core features that work well is essential to avoid extending timelines unnecessarily.

Maintenance, Enhancements, and Repairs

Just like maintaining a house, a DAM system requires ongoing care. After implementation, it’s essential to ensure that the system remains organized and functional. Here are tips for effective maintenance:

- Daily Maintenance: Regularly check and tidy up the system to ensure it operates efficiently.

- Ownership and Oversight: Assign someone to oversee the DAM, particularly in the initial months after launch, to address any issues promptly.

- Resource Allocation: As the DAM system grows in popularity, be prepared to allocate more resources to maintain its success.

Don’t Go It Alone

Implementing a DAM system is not a solitary journey. Just as you would seek help from a realtor or lawyer when buying a home, organizations should consider enlisting experts in DAM. Here’s how to find the right support:

- Consultants and Specialists: Engage professionals who have experience in DAM implementation to guide your organization through the process.

- Communication Teams: If you aim to promote the DAM widely, consider involving internal communications teams to help socialize and promote the system.

- Expert Organizations: Partner with firms that have expertise in metadata, taxonomy, search and navigation, and asset management best practices. Experienced partners can help you set realistic expectations and achieve a smoother launch.

Conclusion

Implementing a DAM system can be a transformative experience for organizations, but it requires careful planning, resource allocation, and ongoing maintenance. By understanding the reasons for adoption, defining needs, and seeking expert help, organizations can navigate the complexities of DAM implementation successfully. Remember, just like moving into a new home, the journey may have its challenges, but the rewards are well worth the effort.

For further insights and resources on DAM implementation, consider exploring specialized DAM consultants and their offerings.

Transcript

Chris Lacinak: 00:00

Hello, welcome to DAM Right.

I’m your host, Chris Lacinak. Today, we’re gonna try something a little different.

We’re gonna do a short episode

that’s about 10 minutes instead of 60 to 90,

and I’d love to know how you feel about it.

Let me know at [email protected].

Today’s episode is an interview with Kara Van Malssen,

who you know if you’re a listener of the show.

If not, I’ll say quickly that Kara is a Partner

and Managing Director at AVP,

a thought leader in the DAMosphere,

and an all-around wonderful person.

The interviewer is former AVP Senior Consultant,

Kerri Willette.

Since doing this interview, Kerri has moved on

and is now doing awesome work, no doubt, at Dropbox.

Kerri is a super talent and pure delight of a human being.

Since we’re keeping this short,

I’ll just quickly say that I really love

how Kara makes the analogy between DAM implementation

and moving into a new home.

She grounds the topic of DAM implementation,

making it both fun and relatable.

I know you’ll enjoy it.

Speaking of which, please go like, follow, or subscribe

on your platform of choice.

And remember, DAM right,

because it’s too important to get wrong.

Kerri Willette: 01:07

We’re here today, we’re gonna talk about

some things that were inspired by the article that you wrote for Henry Stewart’s publication

in the Journal of Digital Media Management,

I think it was volume seven.

That article subsequently evolved into a blog post

that I know you wrote after relocating.

And the blog post is called “Manage Your DAM Expectations.

Or How Getting a DAM is Like Buying and Owning a Home.”

All right, so tip one,

there’s usually a good reason for doing it.

Kara Van Malssen: 01:39

Yeah, so we had an opportunity in another city,

my husband got a job offer. So within five months, we had sold a house,

moved, bought a house, and moved again.

It was quite a lot.

Kerri Willette: 01:52

So what are some of the good reasons

that you’ve heard from organizations who are looking to move to or switch

or get a new DAM system for the first time?

Kara Van Malssen: 02:02

Yeah, so it usually falls into a few different buckets.

Like a lot of times it’s around pain points that they’re having.

So it might be things like,

staff’s trying to find images or videos

and they’re rummaging through Dropbox and Google Drive

and email and hard drives and who knows where,

trying to find what they’re looking for.

And it takes forever and they don’t find it.

So centralizing the assets is one good reason.

Another one we see a lot is maybe misuse of assets

where you’ve got people putting images on social media

that they shouldn’t be using or on the website

that they don’t have permission to use for that purpose.

And so trying to kind of get some control

around the usage of the assets

is another reason we see a lot.

And then another reason might just be

to kind of open up like a new treasure trove of assets

that was previously sort of hidden.

Like maybe you digitized a whole bunch of stuff

and you wanna make that available.

So that’s another good reason.

Kerri Willette: 03:06

So the next tip in your post,

you have to decide what you will need. How do you feel like organizations can answer the question

of what they need in a DAM system?

Kara Van Malssen: 03:16

You’ve gotta figure out what those three to five

or four to six like key differentiator things are or the real deal breakers.

And one of those is always gonna be the budget,

but the other things are unique to you.

Maybe it’s technical things

like you need to host this on-premise

or you need to host it in your own

Amazon Web Services account

or maybe you want the vendor to host it for you.

So those might be some of those considerations

or maybe they’re things like format requirements.

Like you want specific support for InDesign files,

for instance.

Or maybe it’s functional things

like you really need full-text search of documents.

Like that’s critical.

So you don’t wanna look at systems that don’t have that.

It’s like that’s one of your deal breakers,

things like that.

So you’ve gotta kind of figure out what are those top five things

that you really need to have in the DAM

and you can use that to sort of narrow down

the candidate solutions.

And then when you start to evaluate those,

you can really look for the kind of nuanced differences

between them and how they actually help you achieve

the goals that you have in mind.

Kerri Willette: 04:28

Yeah, that makes sense.

So tip three in your blog post talks about making a plan and clearly scoping what you call a minimum viable product

or MVP version of what you need.

And you would do that before implementing a DAMS.

We all know that moving requires a lot of planning,

but what are some areas you’ve seen organizations

that you’ve worked with most often not plan well

for implementing a DAM?

Kara Van Malssen: 04:57

There’s a big difference here between moving a house

and moving into a DAM. You kind of know what’s involved

in the moving house situation.

You know, it’s gonna be like a lot of packing

and organizing and then unpacking and organizing.

But with a DAM, a lot of people

haven’t really done this before.

So it’s a little murky,

like what are the things you need to do?

So what we see is, I think, three things that people,

where they might go wrong here.

So one is they’re not allocating enough resources internally

to the implementation and the migration.

And, you know, it’s probably gonna be like

one person’s full-time job for a while.

So just something to keep in mind.

Another is just not really planning

around major migrations.

If you’ve got a lot of data to move

from one system to another,

or from maybe ten systems

or ten different data stores to another,

it’s just, that’s a lot of work.

It takes time and planning.

And then the last one is kind of getting overly ambitious,

maybe not realizing that you’re doing it,

but, you know, trying to kind of do everything

before you go live.

And maybe that’s including like custom integrations,

maybe custom development on top of the

kind of out of the box features of the system.

It’s like if you got a contractor

and you decided to gut renovate the house

before you moved in,

you better expect that’s gonna take you some time.

So you’re not getting in that house really anytime soon.

But this is an organization,

there’s politics, there’s budget,

there’s like, you know, expectations.

And if the thing drags on for too long

before it gets launched,

that can really damage the reputation of this program.

It can kind of lose political will.

So it’s important to kind of scope something

that’s realistic to just get it off the ground

and get those core features working really well.

So things like just making the search work,

the browse work,

making sure the assets are well organized,

making sure they’re well described and tagged,

that people can easily access them when they should

and they can’t access them when they shouldn’t.

So roll out those key features,

get it in the hands of people

who are gonna give you really good feedback

and gonna start with it.

And then you can get those additional things over time.

Kerri Willette: 07:18

Great.

Tip four, maintenance, enhancement and repairs come with the territory.

So Kara, I happen to know

that you recently discovered a gas leak in your new house.

And luckily you were able to get it repaired really quickly,

but it definitely, I think, brings home your point

about allocating resources for future maintenance

and how that relates to home buying for sure.

So how does that relate to your experiences

helping organizations deploy their DAM systems?

Kara Van Malssen: 07:49

Yeah, it’s like with the house,

you’ve kind of got a gamut of kind of home maintenance and repair and improvement that you’re doing.

Like you’re gonna be cleaning every day,

tidying it up, cleaning the kitchen.

You’re gonna be kind of repairing those things that break

and then you’re gonna be making improvements over time.

It’s really the same thing with a DAM.

You’ve gotta have kind of somebody in there

who’s just making sure everything’s tidy and neat

so that the thing continues to work well for the users.

You’d have to make sure that there’s some ownership

and oversight of the DAM from the very beginning,

especially in those critical,

like first few months after launch.

And then over time,

you might find you even need more resources there

than you thought you would

because maybe it becomes really successful and that’s great,

but you’re probably gonna need to throw a bit more manpower

at it to make sure it continues to succeed.

Kerri Willette: 08:43

All right.

Don’t go it alone. What kind of experts, when it comes to DAM systems,

what kind of expert help might be useful?

Kara Van Malssen: 08:53

Yeah, so it’s like, if you’re getting a house,

you know, you’re probably gonna get a realtor, you’re gonna need a lawyer to help with the closing.

You’re gonna probably have a home inspector

come and check it out before you buy it.

Some of those things you might take on yourself,

but sometimes you’re gonna work with others.

And it’s sort of the same thing with a DAM.

A lot of people, I think,

just figure like, I can do this, let’s do this.

But if you’ve never had any experience implementing a DAM

and you kind of don’t know what that path forward looks like

or what the expectations might be

or where you might run into problems,

it can be really hard.

And if you are doing things like in a custom integration

with other applications,

you might need people like developers.

You know, if you’re really gonna be promoting this widely,

if you have a lot of users you’re trying to get to adopt it,

you might need like communications folks

maybe within your organization

to kind of help socialize it and promote it.

And also, you know, organizations like ours, AVP.

So we are experienced in this.

We have a lot of expertise in things like metadata,

taxonomy, search and navigation,

asset organization, management, best practices

and things like that.

So we’ve been down this road before.

So we can also help you kind of manage your expectations

a little bit and try to get to as much

of a painless launch as possible.

Kerri Willette: 10:14

Well, thanks, Kara.

This was really great. It was nice talking to you.

Kara Van Malssen: 10:18

Yeah, thanks, Kerri.

Appreciate it. (upbeat music)

The Critical Role of Content Authenticity in Digital Asset Management

11 April 2024

The question of content authenticity has never been more urgent. As digital media proliferates and technologies like AI advance, distinguishing genuine content from manipulated material has become crucial in many industries. This blog examines content authenticity, its importance in Digital Asset Management (DAM), and current initiatives addressing these challenges.

Understanding Content Authenticity

Content authenticity means verifying that digital content is genuine and unaltered. This issue isn’t new, but modern technology has intensified the challenges. For example, the FBI seized over twenty-five paintings from the Orlando Museum of Art, demonstrating the difficulty of authenticating artworks. Historical cases, like the fabricated “Protocols of the Elders of Zion,” reveal the severe consequences of misinformation. Because digital content is now ubiquitous, organizations must verify its authenticity. Without proper measures, they cannot be sure that the content they hold and publish is trustworthy.

The Emergence of New Challenges

Digital content production has skyrocketed in the last decade. Social media rapidly disseminates information, often without verification. Generative AI tools create highly realistic synthetic content, blurring the line between reality and fabrication. Deepfakes can simulate real people, raising serious concerns about misinformation. Organizations must combine technology with human oversight to navigate this complex environment.

The Role of Technology in Content Authenticity