A DAMn Good Investment

24 June 2025

When the going gets tough, the tough get investing.

With economic instability, the pressure is on leaders to tighten belts yet remain top of mind for target markets. In 2025, the global economy has been wildly unpredictable, with tariffs, layoffs, and unstable consumer confidence. One of the biggest mistakes I see business leaders make during times of uncertainty is cutting their marketing and advertising budgets altogether.

To unlock the full potential of a company’s data for informed decision-making, it is essential that data be accurately recorded, securely stored, and properly analyzed. This becomes especially critical during economic downturns, when financial scrutiny intensifies and every margin matters. Data presented to prospects and existing customers must be precise to ensure that services and differentiators are clearly and correctly communicated. Internally, the accuracy of data shared with executives and analysts can directly influence client retention, strategic direction, and budget planning.

This is also a matter of operational efficiency. Even with effective employee training, the benefits can only be realized if teams are working from a consistent and reliable source of truth: a digital asset management (DAM) system. Establishing this foundation is an investment that relies more on strategic time allocation than significant capital expenditure.

To position itself for future growth, a company cannot afford to be complacent when evaluating potential technology investments. In a fast-moving digital landscape, organizations that delay improvements during slow periods risk falling behind. In contrast, companies that make deliberate investments, whether through new systems or by dedicating employee time to development and training, will be better prepared to seize emerging opportunities and showcase their competitive advantages as conditions improve.

This is a good time to invest in DAM.

Change is a Good Investment

Change is as present as it is pervasive. It is good to recognize, acknowledge, and accept that change is happening in business, and not only to learn what that means for you and your team but also to be ready for the new opportunities it brings. So, why do we change?

- We change to advance.

- We change to make ourselves stronger.

- We change to adapt to new situations.

Without change, there would be no improvements. If business is about growing, expanding, and making things better for your customers, then what changes are you making? As many of us begin to see future recovery, I too look to the horizon and know that better days are ahead for us all. Whether you call it an improvement, an upgrade, or a modernization, any such effort is holistic by design, encompassing all aspects of the business. Many businesses have taken this time to focus on improving everything that affects people, process, and technology.

This is about good, reliable access to information from many systems, so that those systems enable our work rather than hinder it. Watch for signs and respond well. Improvement for all is a good thing. In business, we always aspire to stability but need to be prepared for the opposite. This is about both insurance and investment.

Invest in DAM

The demand to deliver successful and sustainable business outcomes with our DAM systems often collides with transitioning business models within marketing operations, creative services, IT, or the enterprise. You need to take a hard look at the marketing and business operations and technology consumption with an eye toward optimizing processes, reducing time to market for marketing materials, and improving consumer engagement and personalization with better data capture and analysis.

Time to Transform

To respond quickly to these expectations, we need DAM to work within an effective transformational business strategy that involves the enterprise. Whether you view digital transformation as technology, customer engagement, or marketing and sales, intelligent operations coordinate these efforts towards a unified goal. DAM is strengthened when working as part of an enterprise digital transformation strategy, which considers content management from multiple perspectives, including knowledge, rights and data. Using DAM effectively can deliver knowledge and measurable cost savings, deliver time to market gains, and deliver greater brand voice consistency — valuable and meaningful effects for your digital strategy foundation.

Future-Proof your Content

Consider the opportunity in effective metadata governance: do you have documented workflows for metadata maintenance? Are you future-proofing your evergreen content and data? Remember to listen to your users, to keep up to date and aware of your digital assets, and leverage good documentation, reporting, and analytics to help you learn, grow and be prepared. If you are not learning, you are not growing. If you are not measuring, then you are not questioning, and then you are truly not learning.

Conclusion

Keep the lights on. Now is the time to get smart and strategic with your money to ensure you can weather the current unpredictability and even come out ahead. Tariffs, recession fears, rising prices, and potential layoffs dominate headlines right now. As you look to the second half of the year, this might be causing you to take a close look at budget forecasts and reevaluate spending.

Play the long game. Marketing is a long-term strategy, and DAM is a cornerstone of marketing efforts and operations. More than ever, there is a direct need for DAM to serve as a core application within the enterprise to manage digital assets. The need for DAM remains strong and continues to support strategic organizational initiatives at all levels. DAM provides value in:

- Reducing costs

- Generating new revenue opportunities

- Improving market or brand perception and competitiveness

- Reducing the cost of initiatives that consume DAM services

The decision to implement a DAM isn’t one to take lightly. It is a step in the right direction to gain operational and intellectual control of your digital assets. DAM is essential to growth as it is responsible for how the organization’s assets will be efficiently and effectively managed in its daily operations.

A DAMn good investment to me.

Video – DAM Governance Ask Me Anything with Kara Van Malssen & John Horodyski

27 February 2025

Watch as Kara and John answer hard-hitting DAM governance questions and provide powerful insights.

What you need to know about Media Asset Management

10 December 2024

While marketing strategies vary by company size or industry, they likely have one thing in common: a lot of content.

Every stage of the customer journey is powered by marketing content — from digital ads and social media posts to web pages and nurture emails. And if all the related workflows are going to run smoothly, all of the supporting assets need to be organized effectively.

That’s where media asset management comes in. Let’s take a look at this practice and how media asset management software can help teams achieve their content goals.

What is media asset management?

Media asset management (MAM) is the process of organizing assets for successful storage, retrieval, and distribution across the content lifecycle.

This includes any visual, audio, written, or interactive piece of content that supports a marketing goal. The list of possible marketing assets is long and can include:

- E-books

- Whitepapers

- Customer stories

- Reports and guides

- Infographics

- Webinars

- Explainer videos

- Product demos

- Podcasts

- And more!

In addition, all of these assets are produced with the help of many smaller creative elements, like images and graphics. The volume of these files grows quickly. After all, one photoshoot alone can result in hundreds of images.

And if teams don’t have a centralized repository for their assets, finding a specific file requires a tedious search across shared drives, hard drives, and other devices — which can prove impossible without knowing the filename. Audio and video files can be particularly challenging to manage not only because they tend to be large but also because they are difficult to quickly scan. Sometimes files simply can’t be found and have to be recreated.

This content chaos all adds up to a lot of wasted time and resources…and frustration.

MAM software addresses this problem by providing teams with a single, searchable repository to store and organize all creative files — making asset retrieval a breeze.

How is media asset management software used?

While content management is important for all kinds of teams, MAM focuses on marketing assets and workflows. And the benefits of MAM are numerous — let’s explore some through common use cases.

Distributed marketing teams, one brand

It takes a village to bring a marketing strategy to life, and that village often includes numerous regional offices, remote workers, contracted agencies, and external partners. And all of these content creators and communicators need to be working towards a unified brand experience.

MAM software makes global brand management possible by providing a centralized platform to store and manage files, including brand guidelines and standards. So not only are dispersed team members working from the same playbook, but they are also using the same brand-approved assets — helping to ensure consistency across customer touchpoints.

Ease of use, powered by metadata

Marketing assets are central to workflows across an organization. For example, sales reps need current product materials for deal advancement and customer success managers use the same assets to support and educate existing customers. Their ability to work with agility depends on having these resources at their fingertips.

MAM software includes flexible metadata capabilities that power robust search tools, allowing users to locate an asset with just a few clicks — even in a repository of tens of thousands of files. Further, because MAM software offers easy-to-use versioning capabilities, users can be confident that assets are current. This efficiency accelerates workflows and fuels revenue growth.
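To make the idea of metadata-powered search concrete, here is a minimal sketch in Python. It is not how any particular MAM product works: the `Asset` class, the `search` and `latest_versions` helpers, and the `campaign` and `type` fields are all hypothetical names chosen for illustration. It simply shows how consistent metadata plus a version number lets a user filter a library and land on the current file.

```python
from dataclasses import dataclass, field


@dataclass
class Asset:
    # Hypothetical asset record; real platforms store far richer metadata.
    filename: str
    version: int
    metadata: dict = field(default_factory=dict)


def search(assets, **filters):
    """Return assets whose metadata matches every filter (case-insensitive)."""
    def matches(asset):
        return all(
            str(asset.metadata.get(key, "")).lower() == str(value).lower()
            for key, value in filters.items()
        )
    return [asset for asset in assets if matches(asset)]


def latest_versions(assets):
    """Keep only the highest version of each filename."""
    latest = {}
    for asset in assets:
        current = latest.get(asset.filename)
        if current is None or asset.version > current.version:
            latest[asset.filename] = asset
    return list(latest.values())


library = [
    Asset("spring-banner.png", 1, {"campaign": "Spring Launch", "type": "image"}),
    Asset("spring-banner.png", 2, {"campaign": "Spring Launch", "type": "image"}),
    Asset("product-demo.mp4", 1, {"campaign": "Spring Launch", "type": "video"}),
    Asset("annual-report.pdf", 3, {"campaign": "Investor Relations", "type": "document"}),
]

results = latest_versions(search(library, campaign="spring launch", type="image"))
for asset in results:
    print(asset.filename, "v" + str(asset.version))
```

The filter only works because every asset uses the same field names and values, which is why metadata consistency matters as much as the search tool itself.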

Integrated systems and automated processes

A modern marketing technology (martech) stack includes numerous platforms to store, produce, and publish content, many of which include their own asset libraries.

By positioning a MAM system as the central source of truth for all content, teams can simplify content management and consolidate redundant tools. Powerful APIs and out-of-the-box connectors automate the flow of content from a MAM platform to other systems to ensure the same assets are used across digital destinations, without the need for manual updates across the content supply chain.

What are media asset management software options?

Organizations shopping for a MAM platform have an abundance of choices to consider. A simple search for “media asset management” on G2 — a large, online software marketplace — lists 82 products!

All of these platforms have big things in common. For example, most, if not all, are software as a service (SaaS) solutions in the cloud (versus on-premises software that is installed locally).

However, the specific features that each vendor offers can vary quite a bit. So the first step in any MAM software search is to clearly understand and outline the functionality needed for your unique workflows.

From there, you can really begin your research in earnest — or even start drafting a request for proposal (RFP).

The difference between MAM and DAM

In today’s enormous martech landscape, media asset management overlaps with several other disciplines, including digital asset management (DAM).

While these two solution categories are similar (in name and practice), there are key differences.

DAM refers to the business process of storing and organizing all types of content across a company. This could mean files from the finance department, legal team, human resources, or other business units.

MAM, on the other hand, really focuses on assets that the marketing department requires, including large video and audio files.

So finding the solution that’s right for your team really starts with clarifying your functionality needs, including the types of files you want to store in your system and how you need to manage and distribute them.

Successful technology selection, with AVP

Is a MAM or DAM platform right for your organization? Further, which vendor is the best match for your marketing goals? While answering these questions can be hard, we can help.

AVP’s consultants have worked with hundreds of organizations to select the software partner that best fits their workflow and technology needs. If you’re dealing with content chaos, we’d love to hear from you.

Contact us to learn more about AVP Select — and how we can work together to achieve your content management goals, faster.

DAM system struggles? Don’t rush to blame the platform.

25 March 2024

No one could find anything. People were squirreling away photos and graphics on their personal Dropbox. Videos were on dozens of hard drives. People were misusing assets, violating license terms and brand guidelines. It was time to invest in a DAM system.

You tackled the tough implementation tasks, migrated assets, tagged them, configured the system with your DAM vendor’s help, and trained the users.

(Congratulations on getting this far. I know that was a lot of work.)

Despite these efforts, people still can’t find anything. They are still squirreling away graphics and video. They are still misusing assets.

Some people are blaming the DAM tool. But don’t give up on your technology investment just yet.

Here are 5 reasons why you aren’t getting the most from your DAM solution, and what to do about them.

1. People can’t find anything.

If users are having trouble finding assets in the DAM system (and coming to you to help them find stuff they should be able to get on their own), this may point to an underlying information architecture and/or metadata problem.

Consider the different ways people navigate a digital asset management system: keyword search, filters, curated collections, folder hierarchy. Examine each.

Start by talking to your users about how they search and browse (check out our 3-part series on designing a great search experience to learn more). Ask for real-life examples.

Next, take a look at the underlying data structures that enable search and discovery. Are there issues with metadata quality? Do people want to see different filters than the ones available? Are the curated collections meaningful and relevant? Does the folder structure make sense to them? Are you managing versions correctly?

Find what’s not working and prioritize improvements. Minor adjustments can sometimes significantly enhance users’ ability to find what they need.

2. People are misusing assets.

If people persistently misuse brand assets or licensed images despite your DAM system’s permissions being configured, consider these possibilities:

- Test the system permissions – are people able to access and download things they shouldn’t? If they can, update the user groups and/or system permissions.

- Clarify asset usage rights within the metadata if not already doing so. Lack of clear information may lead users to incorrect assumptions about permissible actions with the content.

- Are all assets stored in the DAM? It may be that people are getting access to content from file sharing tools (that could be outdated and/or lack metadata about usage rights). Sometimes enforcing compliance requires leadership intervention, including support with policies and/or sunsetting legacy systems.
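As a rough illustration of what "clear usage rights in the metadata" can enable, the sketch below checks an asset before use. Everything here is an assumption made for the example: the `usage_rights`, `license_expires`, and `permitted_uses` field names and the `usage_problems` helper are hypothetical, and your DAM system will have its own rights model.

```python
from datetime import date


def usage_problems(asset, intended_use, today):
    """Return a list of reasons the asset should not be used as intended."""
    rights = asset.get("usage_rights")
    if not rights:
        # Missing rights metadata is itself a finding worth surfacing.
        return ["no usage rights recorded in metadata"]
    problems = []
    expires = rights.get("license_expires")
    if expires is not None and expires < today:
        problems.append(f"license expired on {expires.isoformat()}")
    if intended_use not in rights.get("permitted_uses", []):
        problems.append(f"'{intended_use}' is not a permitted use")
    return problems


stock_photo = {
    "filename": "city-skyline.jpg",
    "usage_rights": {
        "license_expires": date(2024, 12, 31),
        "permitted_uses": ["web", "social"],
    },
}
untagged_logo = {"filename": "old-logo.png"}

check_date = date(2025, 6, 24)
print(usage_problems(stock_photo, "print", check_date))
print(usage_problems(untagged_logo, "web", check_date))
```

A check like this only helps if the rights fields are filled in, so assets with no rights metadata are flagged rather than silently allowed.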

3. People complain the DAM system is not easy to use.

DAM admins have likely configured the system as a great tool for themselves, but not for end users.

Look more closely at users’ needs and available system capabilities.

If the system was implemented without engaging users early to understand their needs, was not configured to meet those needs, and/or was not tested with users prior to launch, there may have been some missed opportunities.

It’s not too late to engage with your users to understand their complete workflows, identify their ideal processes, and see how the DAM can integrate into these.

At minimum, you can hopefully modify permissions and layouts to create a more streamlined user experience. It may be possible using the features of your digital asset management system to create tailored storefronts or portals for different user groups that greatly simplify their experience and only provide them the assets they need.

Addressing these findings might start with simple configuration adjustments, then proceed to leveraging existing out-of-the-box integrations, and then move to consideration of custom integrations.

4. The DAM system is a mess.

If your DAM has become a repository for indiscriminate file dumping, it’s time to establish governance.

Allowing digital asset creators to upload content without adherence to standards or quality checks can quickly lead to disorder. Each contributor ends up with their own little silo within the system, organizing, labeling, and describing things differently.

Start by collaborating with key stakeholders to define clear DAM roles and responsibilities. Designate a product owner to establish asset organization and description guidelines. Educate contributors on DAM best practices, highlighting the benefits to their work.

Enforce quality control through guidelines for metadata entry and use of controlled vocabularies, complemented by automated and manual reviews.
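An automated review can be as simple as the sketch below, which flags records with missing required fields or values outside a controlled vocabulary. The field names, the vocabularies, and the `review_metadata` function are hypothetical examples, not a standard or any vendor's schema; substitute the guidelines your product owner defines.

```python
# Hypothetical governance rules for illustration only.
REQUIRED_FIELDS = ("title", "asset_type", "region")
CONTROLLED_VOCABULARIES = {
    "asset_type": {"image", "video", "audio", "document"},
    "region": {"americas", "emea", "apac"},
}


def review_metadata(record):
    """Return a list of issues found in one asset's metadata record."""
    issues = []
    for name in REQUIRED_FIELDS:
        if not str(record.get(name, "")).strip():
            issues.append(f"missing required field: {name}")
    for name, allowed in CONTROLLED_VOCABULARIES.items():
        value = str(record.get(name, "")).strip().lower()
        # Empty values are already reported as missing above.
        if value and value not in allowed:
            issues.append(f"{name}: '{record[name]}' is not in the controlled vocabulary")
    return issues


clean = {"title": "Logo", "asset_type": "Image", "region": "EMEA"}
messy = {"title": "", "asset_type": "photo", "region": "emea"}

print(review_metadata(clean))
print(review_metadata(messy))
```

Records that fail the automated check can then be routed to a person for manual review instead of going straight into the library.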

5. No one uses it.

If you build a DAM, will they come?

Not necessarily.

It’s often too easy for people to stick with old habits, even after the new system has been launched. If you have checked all of the items listed above and the new system is still not getting traction, look closely at how you are managing the transformation.

Successful adoption hinges on change management, requiring both top-down communication from leadership and bottom-up user enablement.

It is critical that leadership communicates the purpose behind the DAM system—what problem it solves, what benefits it will bring to the organization.

But understanding the broader impact isn’t enough. You need to bring users into the conversation so they can understand not just the benefits to the company, but to them personally. They will need to clearly understand the process changes asked of them and the reasons behind these changes. And they will require support as they adjust to new practices.

Still stuck?

It may be time to elevate your DAM solution. At AVP we offer a rapid diagnostic service to pinpoint actionable interventions for DAM success.

Or maybe it really is time to evaluate whether you need a new DAM system.

Either way, we can help. Schedule a call.

Holiday Card Crossword 2023

2 January 2024

Your DAM use cases are only as good as the humans involved

16 November 2023

Building a digital asset management (DAM) program involves many decisions—from selecting a system and configuring interfaces, to architecting workflows. To set your DAM up for success, it is critical to involve your users from the beginning so you can develop and crystalize DAM use cases that will guide decision-making and ease change management.

A common mistake is to neglect taking the time to truly learn about the users, what they need from a system, and what motivates them. Without involving users, you may arrive at a seemingly logical and technically correct solution, but users may not see its relevance.

This risks the DAM program’s future viability. Users are likely to abandon a system that introduces stumbling blocks. Without involving users, you also risk focusing on the wrong problem or building solutions that don’t address their needs.

Invite your users to help you determine the right problem to solve and what adds value to their work. Put humans at the center of your DAM use cases.

From Trail System to Information System

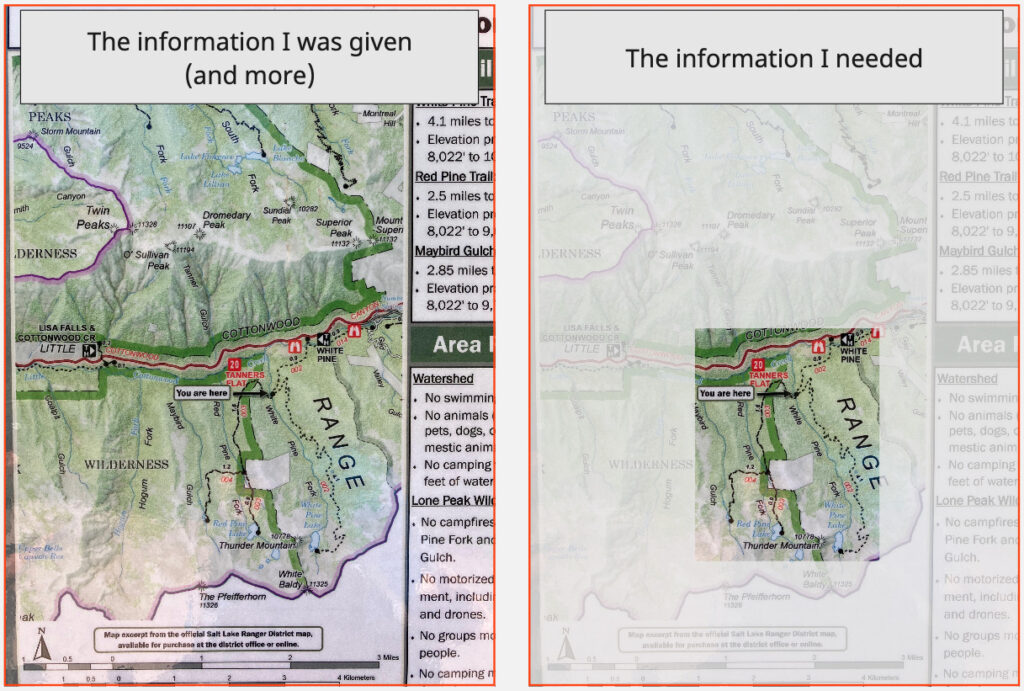

On a recent sunny Saturday, I stood at a fork on a hiking trail staring at a map mounted on a prominent bulletin board. What stood out first to me were the broad lines in purple and green. But they were not the footpaths I was looking for. On a closer look, I saw the trails were marked in skinny, black dotted lines in a much smaller section of the map. The highlighted lines were in fact official borders of the wilderness area.

It occurred to me that this map was made from the perspective of the Forest Service. Standing at this juncture under the hot sun, where I had the dirt paths, tree groves, and running creek in front of me, I did not care where the watershed boundary ended or where the research area began. I was looking only for where the trail splits on the map so I could know whether to go left or right.

Now, I am not fit to argue how the National Forest Service should display its trail maps—I am no cartographer (maybe in an alternative life I dream about!)—but I am a user of trails. This gives me a perspective on what information is useful to communicate to an average trail user. When it comes to the design of the system’s interactions with users, this perspective becomes relevant.

Just as hikers are not the Forest Service, your users are not you: while you have a view of a whole system, users interact with a specific slice of it. And they have a keen awareness of what makes it work well for them.

An information system, such as a digital asset management system, is like a trail system.

If you are managing an enterprise-wide information system, you have a design problem to solve. At the center of a design problem are humans—groups of users and stakeholders.

Their work, their needs, and their minds are inconsistent, ever-changing, hardly linear, and sometimes contradictory. The system is meant to help various teams to do their work, in a way that not only helps the individuals but the collective as a whole. Involve them in the process of developing DAM use cases; learn their perspectives and programmatically incorporate their feedback. Don’t rely on your own assumptions. Don’t create your system implementation in a vacuum.

What is human-centered design?

In the day-to-day work of managing a complex program and suite of technology, some problems grow amorphous. Things can feel messy. You are simultaneously supporting the teams who create content, those who contribute digital assets to the system, and those who need to quickly search and find items and the right information about them. There are stakeholders who want the system to save time, grow programs, provide accurate data, and apply governance and security policies.

Facing the enormity of these problems in a DAM program, human-centered design (HCD) provides a helpful framework. It is a concept increasingly applied in the design of intangible aspects of our world—digital spaces, services, interactions, and organizations.

Yet for systems like DAM—often used by staff internal to an organization—the practice is less commonly adopted. “Human-centered design” might sound like just a catchphrase, but it is defined by an ISO standard (ISO 9241-210:2019)! Officially, HCD is an “approach to systems design and development that aims to make interactive systems more usable by focusing on the use of the system and applying human factors/ergonomics and usability knowledge and techniques.”

In simple terms, it is about involving humans in both the process and the outcomes of the designing of solutions, using their specific needs relevant to the defined problem to guide the solution-seeking.

Let’s look at how the concept of human-centered design can be applied in a digital asset management program. (Or, really, any program managing an information system.)

But first, why does human-centered design matter?

Why engage users?

1. To validate the solution design

User feedback allows you to validate whether the enterprise technology meets the actual needs of its intended users.

2. To identify usability issues

User testing helps surface usability issues, bottlenecks, and pain points that might not be apparent during internal development and testing.

3. To reduce risk

Testing with actual users and gathering user feedback along the way allows for iterative improvement. This helps reduce the likelihood of costly setbacks after implementation and lack of trust among users.

4. To enable change management and improve user adoption

When users feel their feedback is valued and incorporated into the technology, they are more likely to adopt it enthusiastically and become advocates for its widespread use within the organization.

5. To facilitate continuous improvement and scalability

Regularly seeking user input allows the enterprise technology to stay relevant to evolving user needs and changing business requirements.

Applying human-centered design to solve common digital asset management problems

Here are some common problems organizations encounter with their digital asset management strategies, and how developing DAM use cases with human-centered design can help solve them:

No central repository

Collection items, files, or content are in disparate places, organized in a way that makes sense to only a select few, and are artifacts of an evolving team.

To start, learn about the user’s scope of content and their mental model for organizing and searching digital assets. Determine whether a DAM or central repository is needed and viable for the organization. Further define what constitutes digital assets and who the users are in this context. Define the requirements of such a system in the form of user stories from the human’s perspective prior to shopping for technology products and making a selection decision.

“Where is that photo I’ve seen before?”

Users frequently cannot find digital assets (or cannot find them quickly) or have trouble navigating the site.

To start, investigate what the root problem might be and what problem you want to solve. Learn from the users—through interviews, observations, and testing—what they are struggling with. Is this an issue with the layout of the interface? Or is this an issue with the metadata of the digital assets?

Misuse of or confusion about shareable content

The collection needs guardrails and governance to help users avoid mistakenly sharing or misusing content.

To start, define the problem to tackle. Gather information on current constraints such as workflow schedules. If the problem is preventative, programmatically plan out the appropriate access and labeling of content. Configure business logic that conforms to user needs and DAM use cases. If the problem has to do with users’ understanding of the content, conduct user research to learn which characteristics of the metadata attributes are important to the users whose problem you aim to address.

Onboard more teams

The DAM system was originally launched with one team based on how they organize digital assets and campaigns; now it is time to onboard another team that creates a new type of asset. Each team has its unique ways of accessing and organizing assets and its own metadata requirements that govern its workflows.

To start, learn about the differences between various teams, how they organize their content, and the workflows they have for creation, ingest, and/or publishing. Extract user stories and generalize representative functional requirements. Use the requirements as benchmarks, not a checklist, for satisfying various user needs.

Tips on considering users in DAM use cases

There are different ways to think about users in a DAM program. When you sit alongside users to learn about their day-to-day workflows and their stumbling blocks, you are zoomed in. You are borrowing your users’ lenses and viewing the problem through their perspectives. When you return to your desk and consider how a need can be met by the system’s capabilities, you take on a broad perspective. It is then important to make design decisions that are relevant to multiple groups, consistent across the system, and maintainable over time.

Tip #1 You are not your users and stakeholders

Without building DAM use cases and user stories based on real humans, you run the risk of imagining solutions based on your and your team’s own assumptions and preferences. You end up designing a solution that makes sense according to your own (and let’s be honest, biased) perspective. What flows logically to you might become an obstacle to a different group. And you are left scratching your head wondering why users get so confused by a certain step.

Tip #2 Zoom out, and bring the alignment

Your solutions should be programmatically applied and create consistency. The idea is not to make a one-to-one replication of what one user or one group may say they want. Rather, focus on what they need to accomplish. If they want a button because they need to quickly press it every time to complete a repeating task, why not design a solution that batches the step and eliminates the repeating step?

A lot of times, users are too close to the system. For user testing and research to be effective, it is important to ask the right questions. Then, it is up to the researcher and the manager of a DAM program to bring the elements together in the full picture.

Tip #3 Investigate the problem

It is important to begin learning about the problem space by asking questions. Investigate the original problem that initiated this project. Almost always, you will need to investigate and redefine the problem.

Talking to users, you might learn that users are frustrated with the workflow, that the content team thinks of their work in categories contradictory to how they are arranged in the DAM, or that there is a technical flaw that causes access barriers. The first is a finding on someone’s attitude, the second a functional requirement, and the third a system oversight or bug. All of these factors contribute to the problem you are trying to solve.

Each finding suggests different solution ideas. Some require further user research; some may not be true solutions but rather bandaids; some may take a much longer timeline or a bigger budget. There are constraints that every design must work within.

Carefully defined DAM use cases and user needs help determine which solution to pursue. Without taking the time to learn about the content team, how they interact with the digital asset management system, and how other teams search for the content they contributed, it won’t be clear what solution gets to the root of the problem.

Summary

A human-centered approach to managing your digital asset management program helps you ensure you are focusing on the right problem. It helps you build the DAM use cases, distilling the needs you aim to satisfy.

It helps reduce risks by involving users in an iterative process, gathering information, and creating a feedback loop. Involving users in your process also helps to build trust with stakeholders. Prepare users for the transition in DAM as the program grows, introduces new technologies, or onboards new teams. Finally, be sensitive to the human context. Exercise humility, and check your biases and assumptions.

Human-centered design at AVP

At AVP, we apply human-centered design to help solve a variety of information problems. Some examples:

- To guide how collections of massive textual data may implement AI-powered metadata enrichment processes in ways that are useful and ethical, AVP provided a prototype for structuring annotation crowdsourcing and involving various types of users in the process.

- To help program managers determine how technologies should be supported and prioritized, AVP conducted user research and delivered quantitative and qualitative data showing how successfully team members were using the myriad tools.

- An organization needed evidence to support a decision on the future development of a software application. AVP combined technical analysis with qualitative user research that considered human factors—such as technical proficiency and individual motivations—to bolster the recommended decision.

Creating a successful DAM RFP

10 November 2023

In the world of digital asset management (DAM) system selection, requests for proposals (RFPs) are ubiquitous. This is for good reason. A strong RFP includes a user-centered approach outlining priorities, usage scenarios, and requirements. It also provides vendors with an explanation of and context for technology needs, and clear instructions for their proposals. The RFP brings all of the details together in a way that lets organizations perform apples-to-apples comparisons of vendor proposals.

In this post, we provide everything you need to get started on your RFP journey. You’ll learn what is unique about DAM RFPs, how to structure your RFP, and questions to ask vendors. Follow along with our downloadable DAM RFP checklist.

What is an RFP?

A Request for Proposal (RFP) is a business document, sometimes managed by a procurement or purchasing office (and sometimes not). RFPs announce an organization’s need for a new technology, detail the requirements for that technology, define its purpose, and solicit bids for the financial commitment for purchase.

RFPs allow qualified vendors to showcase their technology solutions and demonstrate how they align with those requirements. They act as a gateway for vendors to promote their expertise, capabilities, and innovative technologies to meet the needs of the organization.

It is important to note that RFPs are not mandatory in all contexts. However, they are commonly used in government settings to counteract favoritism, prejudice, and nepotism. RFPs level the playing field. They ensure that vendors are evaluated solely based on the quality of their proposals and the cost of investment. This approach promotes fairness and impartiality, allowing all vendors to compete on an equal footing. By eliminating biases and providing a transparent evaluation process, RFPs enable organizations to make informed decisions that prioritize the best interests of their stakeholders.

How do I create an RFP?

The good news is there are lots of examples of RFPs on the web. And, if you have a procurement office, you can always reach out to get examples of how your organization creates them.

That said, the examples you’ll find online are often generic, not specific to DAM selection. While generic RFP templates can be helpful starting points, they do not always provide insights into how to gather the information to complete the RFP, including business objectives, functional, technical, and format requirements, usage scenarios, and user profiles. All of these are necessary to provide vendors with a comprehensive understanding of your organization’s needs for new DAM technology.

What is unique about DAM RFPs?

Before we jump into the checklists, let’s take a moment to review what makes a DAM RFP unique. First, DAM selection projects will have many internal and external stakeholders. From marketing to creatives and archivists and their constituents, there are many perspectives to represent. It is key, then, that time is spent understanding their broad set of needs, through interviews, surveys, and focus groups.

The digital asset management market offers a multitude of options, with numerous systems available at varying price points and complexities. For organizations unfamiliar with the wide range of choices, sifting through these options can be a challenging endeavor, especially given the intricate nature of DAM systems. These systems often share common functionalities but also possess distinctive features that set them apart.

An RFP can provide your organization with a side-by-side comparison of the vendor proposals. This includes a qualitative comparison and, if done correctly, a quantitative assessment as well.

Factors to consider in DAM procurement

- Content-centric approach: DAMs focus on the challenges of organizing, managing, and distributing digital assets. By focusing on the content itself, DAM systems enhance the accessibility, searchability, and utilization of digital assets. DAMs make it easier for users to find and work with the specific content they need.

- Emphasis on metadata and taxonomy: A successful DAM will enable effective search, discovery, and retrieval of digital assets. It will categorize and describe assets with rich metadata and a structured taxonomy. This ensures users can quickly locate and make sense of their content.

- Integration: DAMs are rarely standalone systems. They often integrate with content management systems (CMS), creative software (think Adobe products), e-commerce platforms, rights management systems, or workflow applications.

- UX and collaboration: Digital Asset Management systems (DAMs) play a pivotal role in facilitating the collaborative efforts of diverse teams and stakeholders both within and outside an organization. This includes enterprise-level DAMs, which may extend across international borders, necessitating support for multiple languages. As such, these systems should offer user-friendly interfaces and accessibility to accommodate the varied needs of their users.

- Permissions and security: Digital assets often have distinct rights that require strict security measures to regulate access and downloads. A robust DAM system safeguards digital content, ensuring that user permissions are securely managed to maintain data integrity and privacy.

- Scalability and performance: When dealing with quickly growing digital collections, the efficiency of your DAM system becomes crucial. It needs to handle growth in the volume of files and accommodate the evolving needs of users without compromising speed and responsiveness. The choice of storage providers and methodologies significantly influences the system’s scalability, ensuring it can seamlessly adapt to increasing demands.

- Vendor expertise and support: Considering a vendor’s track record is an important component of the decision-making process. Hearing from current clients, and investigating reputation, customer support options (often defined in a Service Level Agreement, or SLA), training offerings, and ongoing product development are critical in identifying if their DAM solution is the right one for your organization.

Preparation

Before diving into RFP drafting, first take a step back and think about the complete RFP process. Start by gathering comprehensive requirements to clarify and document your organization’s needs. Establish a clear timeline, complete with milestones and deadlines that include the drafting phase. Finally, begin identifying and researching potential vendors — you might want to adjust your RFP based on what you find.

Administrative Tasks

To simplify your RFP process, begin by checking if your organization already has an appropriate digital asset management system in place or conduct a discovery process (as detailed in the section below). Secure written approval, establish a budget, put together a timeline, and consult with your procurement department to review RFPs and purchasing regulations.

The timeline should cover all essential phases, including RFP creation, distribution, vendor demonstrations, evaluation, and the often-lengthy procurement phase. Practical considerations such as staff vacations and holidays should also be accounted for to mitigate potential disruptions. By addressing these elements, the timeline becomes a comprehensive and practical plan for the entire RFP and DAM selection process, reducing the risk of unforeseen delays.

Finally, consider hiring an experienced DAM sourcing consultant who can leverage their expertise and knowledge of the marketplace to match your organization with the most suitable system for your users.

Discovery

In the process of DAM selection, the discovery phase involves a comprehensive investigation of the organization’s digital asset management needs. This typically starts with in-depth discussions with stakeholders and decision-makers. These conversations help identify specific requirements, challenges, and objectives for managing digital assets.

The discovery process often includes a thorough examination of the organization’s current workflows, analysis of the volume and types of digital assets, and an evaluation of the existing systems and technology infrastructure. Data collection methods such as surveys and data analysis may also gather information on user expectations, content lifecycle, access requirements, metadata needs, user permissions, integration considerations, and long-term preservation strategies.

The active engagement of key stakeholders and thorough review of pertinent in-house documentation are pivotal aspects of the discovery process.

Following the completion of the discovery phase, you should be able to fine-tune the problem statement, establish measurable objectives, and rank your functional and non-functional requirements. At this stage, broaden your vendor research efforts by attending industry events such as Henry Stewart DAM, and by exploring resources like online vendor directories and seeking recommendations from peers and professional networks.

Once you have a feel for vendors, try to narrow down your vendor list to just a handful for the most effective evaluation. A shorter list makes managing internal resources easier, allowing for a meaningful comparison of proposals and identification of strengths and weaknesses. With well-structured discovery and vendor selection processes, your DAM journey is off to a promising start!

RFP Structure

If you have a procurement department, it is likely they have an RFP format you must follow. In that case, consider how you can fit the following information into the existing structure. For those organizations that do not have an internal RFP format, use the following structure:

Overview

This is the initial point of contact and sets the tone for the entire RFP. Start with a concise introduction to your organization, capturing its essence in just a few sentences. Next, provide a brief background on the DAM selection project, highlighting the driving factors and context behind the need for a DAM solution.

A well-crafted problem statement is vital to ensure that vendors understand the challenges you face and the specific pain points you aim to address. Clearly articulate your business objectives, outlining the goals and outcomes you hope to achieve through the implementation of the DAM.

The overview document should include key details such as the current number of digital assets, their size in terabytes (or gigabytes or petabytes), and the primary formats you work with. If possible, provide a growth estimate in percentages, e.g., year-over-year growth of 10%. These specifics will help vendors tailor their solutions to meet your unique needs.
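To make a growth estimate concrete for vendors, it can help to turn the percentage into projected storage figures. The sketch below is illustrative only: the 20 TB starting size and the three-year horizon are assumptions, not figures from any real collection, and `project_storage` is a hypothetical helper that assumes growth compounds once per year.

```python
def project_storage(current_tb: float, annual_growth: float, years: int) -> list[float]:
    """Return projected storage in TB at the end of each year.

    Assumes growth compounds once per year at a constant rate,
    which is a simplification of how real collections grow.
    """
    return [
        round(current_tb * (1 + annual_growth) ** year, 2)
        for year in range(1, years + 1)
    ]


# Hypothetical starting point: 20 TB today, growing 10% year over year.
projection = project_storage(current_tb=20.0, annual_growth=0.10, years=3)
for year, size_tb in enumerate(projection, start=1):
    print(f"Year {year}: {size_tb} TB")
```

Including a small table of figures like these in the overview gives vendors a clearer basis for storage pricing than a percentage alone.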

The overview also serves as a guide to vendors on how to navigate the RFP process. Include a timeline with key dates such as the RFP issue date, the deadline for vendor questions, when your organization will respond to questions, when vendors are required to confirm their intent to submit proposals, and the proposal submission deadline. Also, mention the subsequent steps, such as the notification of selected offerors for potential demonstrations and presentations, and the final selection process.

Make sure to specify the preferred delivery format and method for proposals and the required deliverables. Additionally, provide an overview of the evaluation criteria and scoring process that will be used to assess the proposals.
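One common way to structure a scoring process is a weighted scorecard, in which each evaluation criterion carries a weight and each proposal receives a score per criterion. The following is a minimal sketch under stated assumptions: the criteria names, the weights, the 1-to-5 scale, and the two vendors are all hypothetical placeholders that your own evaluation team would replace.

```python
# Hypothetical criteria and weights; adjust to your organization's priorities.
WEIGHTS = {
    "functional_fit": 0.40,
    "usability": 0.20,
    "support": 0.15,
    "cost": 0.25,
}


def weighted_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Combine per-criterion scores (assumed 1-5 scale) into one weighted average."""
    if set(scores) != set(weights):
        raise ValueError("Every criterion needs exactly one score.")
    total_weight = sum(weights.values())
    return sum(scores[name] * weights[name] for name in weights) / total_weight


# Hypothetical evaluator scores for two vendor proposals.
proposals = {
    "Vendor A": {"functional_fit": 4, "usability": 3, "support": 5, "cost": 2},
    "Vendor B": {"functional_fit": 3, "usability": 5, "support": 3, "cost": 4},
}

results = {name: weighted_score(scores, WEIGHTS) for name, scores in proposals.items()}
for name, score in sorted(results.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {score:.2f}")
```

Sharing the criteria and their relative weights in the RFP, even at a high level, tells vendors where to focus their responses.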

Include relevant contact information for any inquiries, and consider including a glossary of terms specific to DAM and the RFP. Clarify aspects like incurred costs to vendors, retention of submitted documentation, external partnerships, market references, and the importance of confidentiality and non-disclosure agreements (NDA).

Requirements Spreadsheet

In the process of crafting your DAM RFP, it is essential to establish a well-organized structure for your requirements. To begin, let’s define some key terms.

Functional requirements refer to specific capabilities or features that the digital asset management system must possess to meet your organization’s needs. These requirements can be structured as user stories, framing them in the context of “As an X, I need to Y, so that Z,” to clearly define who needs the functionality, what they need, and why. These functional requirements are essentially the building blocks that shape how the DAM system will operate, focusing on the user experience and the desired outcomes.

On the other hand, nonfunctional or technical requirements relate to the broader technical aspects that the DAM system should meet. These may include performance, security, scalability, and other technical considerations that are essential for the system’s effective operation. Additionally, format requirements specify the primary file formats and expectations for managing digital assets within the DAM. These include image formats (.jpg), videos (.mp4), documents (.pdf), and other file formats (e.g., Adobe and Microsoft file formats). They outline how the digital asset management system should handle and support these formats.

For further clarity, identify stakeholders and categorize them into three main types: DAM Administrators, Content Creators, and End Users. Defining their roles and capabilities, and noting the number of each, is particularly valuable for vendors, especially those who charge based on the number of user seats.

This structured approach not only helps DAM vendors understand your needs, it enables them to provide comprehensive and customized responses to your RFP. A helpful tool for organizing this content is a simple spreadsheet with distinct tabs for each requirement category. This provides a clear distinction between functional, nonfunctional/technical, and format requirements.
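As an illustration of how user stories might be captured before they go into a spreadsheet tab, here is a short sketch. The `UserStory` class, the column names, the priority labels, and the two sample stories are assumptions made for this example, not a required format.

```python
import csv
import io
from dataclasses import dataclass


@dataclass(frozen=True)
class UserStory:
    """One functional requirement in 'As an X, I need to Y, so that Z' form."""
    role: str
    need: str
    benefit: str
    priority: str  # hypothetical labels, e.g. "must-have" or "nice-to-have"

    def as_sentence(self) -> str:
        # Simple heuristic for a/an; it will not be right for every role name.
        article = "an" if self.role[0].lower() in "aeiou" else "a"
        return f"As {article} {self.role}, I need to {self.need}, so that {self.benefit}."


stories = [
    UserStory("archivist", "search assets by creation date",
              "I can locate historical material quickly", "must-have"),
    UserStory("content creator", "upload files in bulk",
              "I can contribute a full shoot at once", "nice-to-have"),
]


def to_csv(items: list[UserStory]) -> str:
    """Render the stories as CSV text that can be pasted into a requirements tab."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["Role", "Requirement", "Priority"])
    for story in items:
        writer.writerow([story.role, story.as_sentence(), story.priority])
    return buffer.getvalue()


print(to_csv(stories))
```

Keeping the role, need, and benefit as separate fields makes it easy to sort or filter requirements by stakeholder type later.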

Usage Scenarios

Usage scenarios are your secret weapon! They prioritize the user and bring your requirements to life.

A usage scenario, sometimes referred to as a “use case,” is a detailed narrative describing how a system or product is used in a specific real-world context. These scenarios provide a human-readable representation of functional requirements, offering a comprehensive view of how the system behaves and responds within different situations. Use cases help stakeholders and vendors, including both technical and non-technical individuals, to grasp how the system’s features and functionalities align with practical user needs and operational processes.

We highly recommend including three to seven usage scenarios in your RFP. If you have more, consider combining and prioritizing them. Each usage scenario should have a brief title, an objective that explains its purpose, and actors identified from your User Descriptions in the Requirements Spreadsheet. Provide background context and describe the main steps or interactions that actors will perform in the future system. Remember to allow flexibility for different solutions to the same problem. Usage scenarios are the heart of your RFP, so craft them to effectively convey your requirements.

Vendor Questionnaire

As you delve deeper into crafting your RFP, don’t overlook the significance of your vendor questionnaire. The vendor questionnaire is a comprehensive list of questions that go beyond requirements and use cases, focusing on higher-level aspects of the DAM vendor company, their implementation and support procedures, and proposal costs, providing valuable insights into their capabilities and suitability for the project.

The questionnaire serves as a vital component of your DAM RFP. It gathers in-depth information essential for the side-by-side evaluation of different systems. Number the questionnaire so that vendors can easily refer directly to the questions in their proposal.

A vendor questionnaire should cover general company information, product details, technical support, and references from comparable organizations. It should also include specific questions about the costs associated with the system, including license fees, implementation costs, and support expenses.

Conclusion

The Request for Proposal is a key component of the DAM procurement process. RFPs provide structured and transparent frameworks for evaluating and selecting a new DAM system. They enable a fair and consistent evaluation process by clearly defining requirements and usage scenarios, evaluation criteria, and submission guidelines. This allows organizations to compare proposals from multiple DAM vendors objectively, ensuring that the selected vendor best aligns with their needs and objectives. RFPs help mitigate risks by providing a systematic approach to DAM vendor selection, fostering accountability, and minimizing subjective decision-making.

Are you ready to embark on your own DAM RFP process? We’ve got you covered! [Click here] to download our comprehensive DAM RFP checklists.

These valuable resources will guide you through planning, development, and distribution of your RFP, ensuring you achieve the best possible outcome. Don’t miss out on this essential tool to streamline your RFP journey.

Getting Started with AI for Digital Asset Management & Digital Collections

13 October 2023

Talk of artificial intelligence (AI), machine learning (ML), and large language models (LLMs) is everywhere these days. With the increasing availability and decreasing cost of high-performance AI technologies, you may be wondering how you could apply AI to your digital assets or digital collections to help enhance their discoverability and utility.

Maybe you work in a library and wonder whether AI could help catalog collections. Or you manage a large marketing DAM and wonder how AI could help tag your stock images for better discovery. Maybe you have started to dabble with AI tools, but aren’t sure how to evaluate their performance.

Or maybe you have no idea where to even begin.

In this post, we discuss what artificial intelligence can do for libraries, museums, archives, company DAMs, or any other organization with digital assets to manage, and how to assess and select tools that will meet your needs.

Examples of AI in Libraries and Digital Asset Management

Wherever you are in the process of learning about AI tools, you’re not alone. We’ve seen many organizations beginning to experiment with AI and machine learning to enrich their digital asset collections. We helped the Library of Congress explore ways of combining AI with crowdsourcing to extract structured data from the content of digitized historical documents. We also worked with Indiana University to develop an extensible platform for applying AI tools, like speech-to-text transcription, to audiovisual materials in order to improve discoverability.

What kinds of tasks can AI do with my digital assets?

Which AI methods work for digital assets or collections depends largely on the type of asset. Text-based, still image, audio, and video assets all have different techniques available to them. This section highlights the most popular machine learning-based methods for working with different types of digital material. This will help you determine which artificial intelligence tasks are relevant to your collections before diving deep into specific tools.

AI for processing text – Natural Language Processing

Most AI tools that work with text fall under the umbrella of Natural Language Processing (NLP). NLP encompasses many different tasks, including:

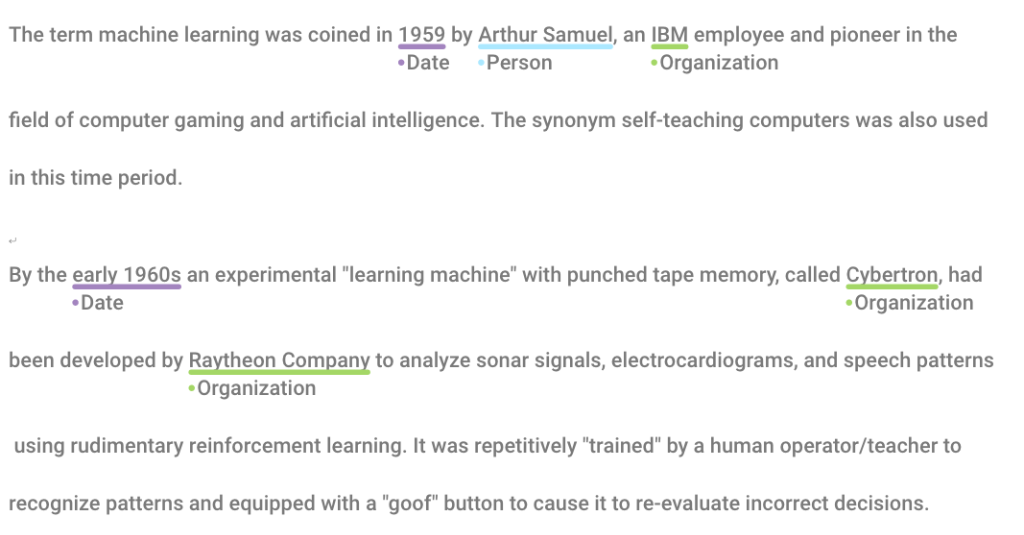

- Named-Entity Recognition (NER) – NER is the process of identifying significant categories of things (“entities”) named in text. Usually these categories include people, places, organizations, and dates, but might also include nationalities, monetary values, times, or other concepts. Libraries or digital asset management systems can use named-entity recognition to aid cataloging and search.

- Sentiment analysis – Sentiment analysis is the automatic determination of the emotional valence (“sentiment”) of text. For example, determining whether a product review is positive, negative, or neutral.

- Topic modeling – Topic modeling is a way of determining what general topic(s) the text is discussing. The primary topics are determined by clustering words related to the same subjects and observing their relative frequencies. Topic modeling can be used in DAM systems to determine tags for assets. It could also be used in library catalogs to determine subject headings.

- Machine translation – Machine translation is the automated translation of text from one language to another–think Google Translate!

- Language detection – Language detection is about determining what language or languages are present in a text.

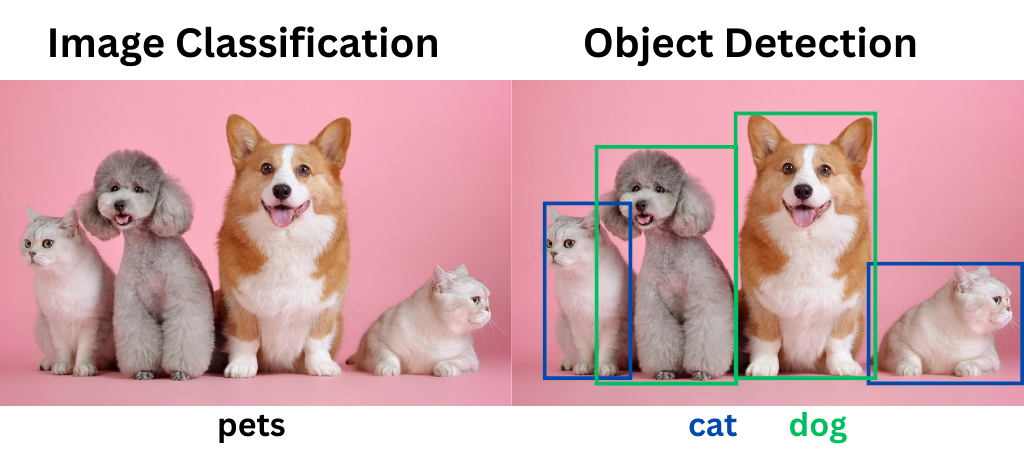

AI for processing images and video – Computer Vision

Using AI for images and videos involves a subfield of artificial intelligence called Computer Vision. Many more tools are available for working with still images than with video. However, the methods used for images can often be adapted to work with video as well. AI tasks that are most useful for managing collections of digital image and video assets include:

- Image classification – Image classification applies labels to images based on their contents. For example, image classification tools will label a picture of a dog with “dog.”

- Object detection – Object detection goes one step further than image classification. It both locates and labels particular objects in an image. For example, a model trained to detect dogs could locate a dog in a photo full of other animals. Object detection is also sometimes referred to as image recognition.

- Face detection/face recognition – Face detection models can tell whether a human face is present in an image or not. Face recognition goes a step further and identifies whether the face is someone it knows.

- Optical Character Recognition (OCR) – OCR is the process of extracting machine-readable text from an image. Imagine the difference between having a Word document and a picture of a printed document–in the latter, you can’t copy/paste or edit the text. OCR turns pictures of text into digital text.

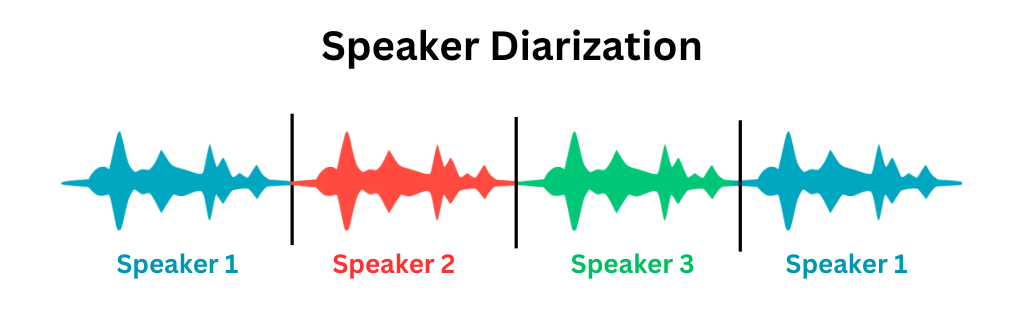

AI for processing audio – Machine Listening

AI tools for working with audio are fewer and farther between. The time-based nature of audio, as opposed to more static images and text, makes working with audio a bit more difficult. But there are still methods available!

- Speech-to-text (STT) – Speech-to-text, also called automatic speech recognition, transcribes speech into text. STT is used in applications like automatic caption generation and dictation. Transcripts created with speech-to-text can be sent through text-based processing workflows (like sentiment analysis) for further enrichment.

- Music/speech detection – Speech, music, silence, applause, and other kinds of content detection can tell you which sounds occur at which timestamps in an audio clip.

- Speaker identification / diarization – Speaker identification or diarization is the process of identifying the unique speakers in a piece of audio. For example, in a clip of an interview, speaker diarization tools would identify the interviewer and the interviewee as speakers. They would also tell you where in the audio each person speaks.
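To give a sense of what time-based audio results can look like, the sketch below works with diarization-style output. The segment format (speaker label, start time, end time in seconds) and the sample values are assumptions for illustration; real tools each define their own output structure.

```python
from collections import defaultdict

# Hypothetical diarization output: (speaker label, start seconds, end seconds).
segments = [
    ("interviewer", 0.0, 12.5),
    ("interviewee", 12.5, 60.0),
    ("interviewer", 60.0, 66.0),
]


def speaking_time(items: list[tuple[str, float, float]]) -> dict[str, float]:
    """Total the number of seconds each speaker talks across all segments."""
    totals: dict[str, float] = defaultdict(float)
    for speaker, start, end in items:
        totals[speaker] += end - start
    return dict(totals)


totals = speaking_time(segments)
for speaker, seconds in totals.items():
    print(f"{speaker}: {seconds:.1f} seconds")
```

Timestamped segments like these are what make it possible to jump to the point in a recording where a particular person speaks.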

What is AI training, and do I need to do it?

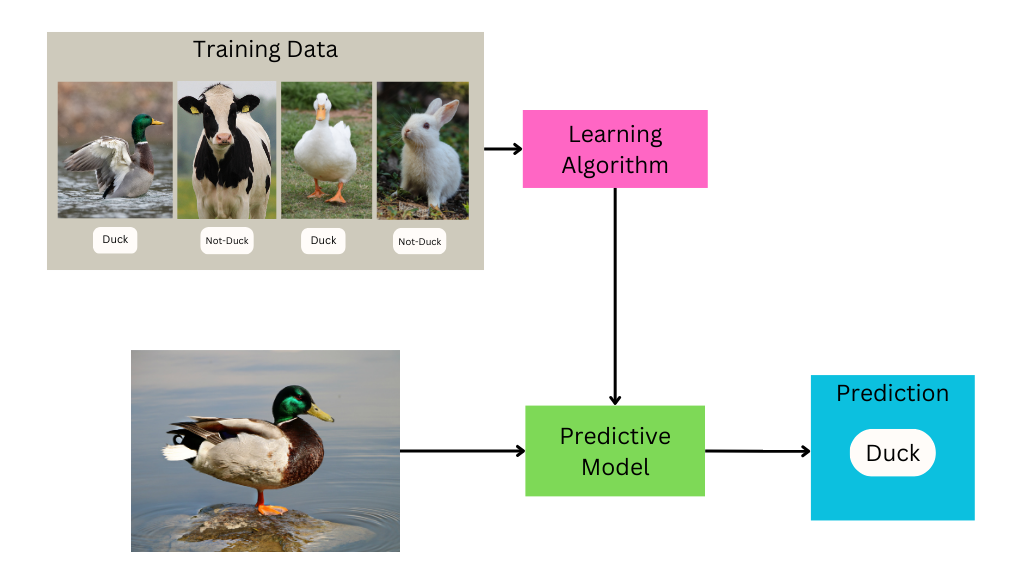

Training is the process of “teaching” an algorithm how to perform its task.

Creating a trained machine learning model involves developing a set of training data, putting that data through a learning algorithm, and tweaking parameters until it produces desirable results.

You can think of training data as the “answer key” to a test you want the computer to take.

For example, if the task you want to perform is image classification–dividing images into different categories based on their contents–the training data will consist of images labeled with their appropriate category. During the training process, the computer examines that training data to determine what features are likely to be found in which categories, and subsequently uses that information to make guesses about the appropriate label for images it’s never seen before.

In addition to the labeled data given to the algorithm for learning, some data has to be held back to evaluate the performance of the model. This is sometimes called “ground truth” testing, which we’ll discuss more below.
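Holding data back can be as simple as shuffling the labeled examples and setting a portion aside before training. The following is a minimal sketch: the file names, the labels, the 20% test fraction, and the fixed seed are all assumptions chosen for the example.

```python
import random


def split_labeled_data(items, test_fraction=0.2, seed=42):
    """Shuffle labeled examples and hold back a fraction for evaluation.

    Returns (training_items, held_out_items). The fixed seed makes the
    split repeatable so later tests use the same held-out examples.
    """
    if not 0 < test_fraction < 1:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_held_out = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_held_out:], shuffled[:n_held_out]


# Hypothetical labeled images: (file name, category).
labeled = [(f"image_{i:03d}.jpg", "dog" if i % 2 else "cat") for i in range(10)]
training, held_out = split_labeled_data(labeled)
print(len(training), "for training;", len(held_out), "held back for testing")
```

The key point is that the held-out examples are never shown to the learning algorithm, so they give an honest picture of how the model handles content it has not seen.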

Developing training and testing data is often the most time-consuming and labor-intensive part of working with machine learning tools. Getting accurate results often requires thousands of sample inputs, which may (depending on the starting state of your data) need to be manually processed by humans before they can be used for training.

Training AI tools sounds costly. Is it always necessary?

Custom training may not be required in all cases. Many tools come with “pre-trained” models you can use. Before investing loads of resources into custom training, determine whether these out-of-the-box options meet your quality standards.

Keep in mind that all machine learning models are trained on some particular set of data.

The data used for training will impact which types of data the model is well-suited for—for example, a speech-to-text model trained on American English may struggle to accurately transcribe British English, and will be completely useless at transcribing French.

Researching the data used to train out-of-the-box models and determining its similarity to your data can help set your expectations for the tool’s performance.

Choose the right AI tool for your use case

Before you embark on any AI project, it’s important to articulate the problem you want to solve and consider the users that this AI solution will serve. Clearly defining your purpose will help you assess the risks involved with the AI, help you measure the success of the tools you use, and help you determine the best way to present or make use of the results for your users in your access system.

All AI tools are trained on a limited set of content for a specific use case, which may or may not match your own. Even “general purpose” AI tools may not produce results at the right level of specificity for your purpose. Be cautious of accuracy benchmarks provided by AI services, especially if there is little information on the testing process.

The best way to determine if an AI tool will be a good fit for your use case is to test it yourself on your own digital collections.

How to evaluate AI tools

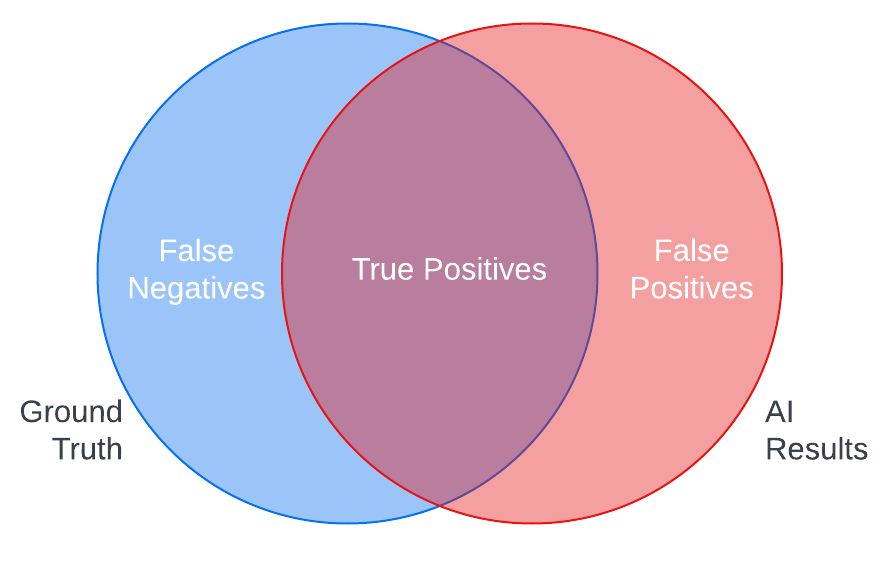

Ground truth testing is a standard method for testing AI tools. In ground truth testing, you create examples of the ideal AI output (ground truth) for samples of your content and check them against the actual output of the AI to measure the tool’s accuracy.

For instance, comparing the results of an object recognition tool against the list of objects you expect the tool to recognize in a sample of images in your digital asset management system can show you the strengths of the AI in correctly identifying objects in your assets (true positives) and its weaknesses in either not detecting objects it should have (false negatives) or misidentifying objects (false positives).

Common quantitative measures for ground truth testing include precision and recall, which can help you better calculate these risks of omission and misidentification. You can also examine these errors qualitatively to better understand the nature of the mistakes an AI tool might make with your content, so you can make informed decisions about what kind of quality control you may need to apply or if you want to use the tool at all.
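Precision is the share of the AI’s outputs that were correct (true positives divided by true positives plus false positives), and recall is the share of the expected results that the AI actually found (true positives divided by true positives plus false negatives). The sketch below computes both for a single image; the label sets are made up for illustration, and returning 0.0 when there is nothing to divide by is a convention assumed here rather than a standard.

```python
def precision_recall(expected: set[str], predicted: set[str]) -> tuple[float, float]:
    """Compare ground-truth labels for one asset with the labels an AI tool returned."""
    true_positives = len(expected & predicted)   # correctly identified
    false_positives = len(predicted - expected)  # misidentified
    false_negatives = len(expected - predicted)  # missed
    # Assumed convention: report 0.0 when a denominator would be zero.
    precision = true_positives / (true_positives + false_positives) if predicted else 0.0
    recall = true_positives / (true_positives + false_negatives) if expected else 0.0
    return precision, recall


# Hypothetical ground truth and tool output for one image.
ground_truth = {"dog", "ball", "tree", "bench"}
tool_output = {"dog", "ball", "cat"}

precision, recall = precision_recall(ground_truth, tool_output)
print(f"precision={precision:.2f}, recall={recall:.2f}")
```

In this made-up example the tool is right about two of its three labels but finds only two of the four expected objects, so low recall (omission) is the larger risk.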

Ground truth testing, however, can be costly to implement.

Creating ground truth samples is time-consuming, and the process of calculating comparison metrics requires specialized knowledge. It’s also important to keep in mind that ground truth can be subjective, depending on the type of tool—the results you’d expect to see may differ in granularity or terminology from the outputs the AI was trained to produce.

In the absence of ground truth, you can visually scan results for false positives and false negatives to get a sense of what kinds of errors an AI might make on your content and how they might impact your users.

Is it important that the AI finds all of the correct results? How dangerous are false positives to the user experience?

Seeing how AI results align with your answers to questions like these can help to quickly decide whether an AI tool is worth pursuing more seriously.

In addition to the quality of results, it is also important to consider other criteria when evaluating AI tools. What are the costs of the tool, both paid services and staff time needed to implement the tool and review or correct results? Will you need to train or fine-tune the AI to meet the needs of your use case? How will the AI integrate with your existing technical infrastructure?

To learn more about how you can evaluate AI tools for your digital assets with users in mind, check out AVP’s Human-Centered Evaluation Framework webinar, which includes a quick reference guide to these and many other questions to ask vendors or your implementation team.

When not to use artificial intelligence

With all of the potential for error, how can you decide if AI is really worth it? Articulating your goals and expectations for AI at the start of your explorations can help you assess the value of the AI tools you test.

Do you want AI to replace the work of humans or to enhance it by adding value that humans cannot or do not have the time to do? What is your threshold for error? Will a hybrid human and AI process be more efficient or help relieve the tedium for human workers? What are the costs of integrating AI into your existing workflows and are they outweighed by the benefits the AI will bring?

If your ground truth tests show that commercial AI tools are not quite accurate enough to be worth the trouble, consider testing again with the same data in 6 months or a year to see if the tools have improved. It’s also important to consider that tools may change in a way that erodes accuracy for your use case. For that reason, it’s a good idea to regularly test commercial AI tools against your baseline ground truth test scores to ensure that AI outputs continue to meet your standards.
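Regular retesting can be reduced to comparing each new set of scores with the stored baseline and flagging any meaningful drop. The following is a rough sketch; the metric names, the scores, and the 0.02 tolerance are assumptions, and the right tolerance depends on your own threshold for error.

```python
def find_regressions(baseline: dict[str, float], current: dict[str, float],
                     tolerance: float = 0.02) -> dict[str, tuple[float, float]]:
    """Return metrics whose current score fell below baseline by more than tolerance.

    A metric missing from the current results is treated as a score of 0.0.
    """
    regressions = {}
    for metric, baseline_score in baseline.items():
        current_score = current.get(metric, 0.0)
        if baseline_score - current_score > tolerance:
            regressions[metric] = (baseline_score, current_score)
    return regressions


# Hypothetical scores from the original test and from a retest six months later.
baseline_scores = {"precision": 0.90, "recall": 0.80}
retest_scores = {"precision": 0.91, "recall": 0.74}

regressions = find_regressions(baseline_scores, retest_scores)
for metric, (before, after) in regressions.items():
    print(f"{metric} dropped from {before:.2f} to {after:.2f}")
```

Running a check like this on the same ground truth sample each time keeps the comparison fair, since any change in scores then reflects a change in the tool rather than in the test data.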

Now what?

The topics we’ve covered in this post are only the beginning! Now that you’ve upped your AI literacy and have a basic handle on how AI might be useful for enhancing your digital assets or collections, start putting these ideas into action.

Learn how AVP can help with your AI selection or evaluation project

Preserving Digital Assets: A Gap in the DAM Marketplace

17 August 2023

Cultural heritage organizations increasingly seek out a digital asset management system (DAM) that integrates robust digital preservation capabilities for preserving digital assets. They often recognize the importance of investing in digital preservation but struggle with the challenge of maintaining separate DAM and digital preservation systems due to limited resources.

While DAM systems typically prioritize security and permissions and use cloud storage (all found in digital preservation systems as well), they still lack the comprehensive functionality that cultural heritage organizations and others consistently seek to help with preserving digital assets.

Despite the maturity of the DAM market, there remains a persistent gap between the preservation functionality that cultural heritage organizations desire and the systems currently available.

At AVP, we have witnessed this shift in what organizations are seeking first-hand through our work assisting organizations in finding the perfect technology solutions to meet their unique requirements, from digital asset management and media asset management (MAM) to digital preservation systems and records management systems.

In light of this issue, I would like to delve into the reasons behind this disparity and share AVP’s recommendations on how organizations can navigate the technical landscape for preserving digital assets effectively. Let’s explore the evolving needs of organizations and uncover strategies for achieving their goals within the realm of digital asset management and digital preservation.

Why can’t Digital Asset Management just “do Digital Preservation”?

It is crucial to grasp the fundamental differences between these two types of systems and their respective functionalities.

According to IBM, a DAM is “a comprehensive solution that streamlines the storage, organization, management, retrieval, and distribution of an organization’s digital assets.”

The lending library

To paint a visual picture, envision a DAM as a lending library.

Just like books neatly arranged on shelves, digital assets are meticulously organized, described, and managed within the DAM. Library users can navigate the catalog using various criteria such as subject, author, or date to locate specific assets, just as they can in the DAM. And, similar to needing a library card to borrow books, access to the DAM requires registered users to have appropriate permissions to access and utilize the digital assets.

Essentially, a well-managed DAM ensures that your digital assets are securely stored, easily searchable, and readily accessible. It functions as a virtual library, providing efficient organization and control over your organization’s valuable digital resources.

The offsite storage

Building upon the library analogy, let’s delve into the unique characteristics of a digital preservation system.

Imagine the library books that are not frequently accessed. Instead of occupying valuable space on the main shelves, they are often relocated to a secure, climate-controlled warehouse. These books are packed in containers on tall shelving units, accessible to only a select few individuals. Browsing becomes nearly impossible, searching becomes challenging, and obtaining one of these books typically requires assistance from a librarian.

In the digital realm, a digital preservation system serves as the digital counterpart to this offsite storage. It replaces physical locked warehouses with secure user permissions, ensures file verification and fixity testing to maintain data integrity, employs packaging mechanisms called “bags,” and utilizes cold data storage for long-term preservation.
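To make the idea of a "bag" more concrete, here is a minimal sketch loosely modeled on the BagIt packaging convention: payload files go in a data/ folder, and a manifest records a checksum for each one so the package can be verified later. The function names and sample files are hypothetical, and a production workflow would rely on a maintained library rather than this illustration.

```python
import hashlib
import tempfile
from pathlib import Path


def make_bag(bag_dir, files):
    """Write payload files under data/ plus a bag declaration and SHA-256 manifest."""
    bag_dir = Path(bag_dir)
    data_dir = bag_dir / "data"
    data_dir.mkdir(parents=True)
    manifest_lines = []
    for name, content in sorted(files.items()):
        (data_dir / name).write_bytes(content)
        digest = hashlib.sha256(content).hexdigest()
        manifest_lines.append(f"{digest}  data/{name}")
    (bag_dir / "bagit.txt").write_text(
        "BagIt-Version: 1.0\nTag-File-Character-Encoding: UTF-8\n", encoding="utf-8"
    )
    (bag_dir / "manifest-sha256.txt").write_text(
        "\n".join(manifest_lines) + "\n", encoding="utf-8"
    )
    return bag_dir


def verify_bag(bag_dir):
    """Return payload paths whose current checksum no longer matches the manifest."""
    bag_dir = Path(bag_dir)
    manifest = (bag_dir / "manifest-sha256.txt").read_text(encoding="utf-8")
    failures = []
    for line in manifest.splitlines():
        expected, rel_path = line.split(maxsplit=1)
        actual = hashlib.sha256((bag_dir / rel_path).read_bytes()).hexdigest()
        if actual != expected:
            failures.append(rel_path)
    return failures


# Hypothetical example: bag two small files, then simulate silent corruption.
with tempfile.TemporaryDirectory() as tmp:
    bag = make_bag(Path(tmp) / "example_bag",
                   {"photo.tif": b"image bytes", "notes.txt": b"hello"})
    print("failures on fresh bag:", verify_bag(bag))
    (bag / "data" / "notes.txt").write_bytes(b"hellp")
    print("failures after corruption:", verify_bag(bag))
```

The point of the manifest is that the checksums travel with the files, so anyone who receives or restores the bag can confirm nothing has changed since it was packaged.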

A digital preservation system focuses primarily on safeguarding and preserving digital assets, and it prioritizes security and protection over immediate accessibility.

Same-same but different?

From these descriptions, it is evident that the fundamental purposes of DAM and digital preservation systems are significantly different, although there are areas of overlap. For instance, both the library and warehouse prioritize secure storage of their respective materials. (Ever walked out of a library without checking out your book only to set the alarm off?)

Likewise, both DAM and digital preservation systems maintain strong user permissions to ensure security. Similarly, while libraries may employ climate control measures — albeit less stringent than those governing the warehouse’s temperature and humidity levels — some DAMs may also implement “lightweight” functionality for preserving digital assets, such as fixity testing upon upload.

This distinction emphasizes the intrinsically divergent purposes of DAM and digital preservation systems.

DAMs primarily excel in efficient asset management and user accessibility, allowing organizations to easily organize, retrieve, and distribute their digital assets. A digital preservation system, on the other hand, places paramount importance on long-term preservation and data integrity, safeguarding valuable assets for future generations.

How can I use a DAM system for preserving digital assets today?

Increasingly, DAM vendors are adding digital preservation functionality to their systems. At a minimum, most DAM systems perform:

- Creation of checksum hash values (e.g., MD5) on ingest

- Event logging (whenever an action is taken on a file)

Some DAM systems can also do the following:

- Virus checking on ingest

- Hybrid (tiered) storage (a combination of hot and cold storage or online, nearline, and offline storage)

Only a very small number of DAM systems may also:

- Make checksum values visible to users

- Test existing checksum values on ingest

- Enable manual and/or regular fixity testing

- Run reports on or export event logs

And at the time of writing, no DAM performs automated obsolescence monitoring of file formats (to our knowledge).
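For readers who want to see what these functions amount to in practice, the following is a minimal sketch of checksum creation on ingest, testing a supplied checksum, event logging, and a later fixity test. It is not how any particular DAM implements them: the function names, the in-memory event log, and the sample asset are all assumptions made for illustration.

```python
import hashlib
from datetime import datetime, timezone

# Assumption: a real system would use a persistent, append-only store.
event_log = []


def log_event(asset_id, event_type, outcome, detail=""):
    """Record an action taken on a file, with a UTC timestamp."""
    event_log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "asset_id": asset_id,
        "event": event_type,
        "outcome": outcome,
        "detail": detail,
    })


def ingest(asset_id, content, supplied_md5=None):
    """Create an MD5 checksum on ingest and, if one was supplied, test it."""
    computed = hashlib.md5(content).hexdigest()
    if supplied_md5 is not None and supplied_md5.lower() != computed:
        log_event(asset_id, "ingest", "failure", "supplied checksum does not match")
        raise ValueError(f"checksum mismatch for {asset_id}")
    log_event(asset_id, "ingest", "success", f"md5={computed}")
    return computed


def fixity_check(asset_id, content, stored_md5):
    """Recompute the checksum later and log whether it still matches."""
    ok = hashlib.md5(content).hexdigest() == stored_md5
    log_event(asset_id, "fixity_check", "success" if ok else "failure")
    return ok


# Hypothetical asset: ingest it, then run fixity tests on intact and altered copies.
stored = ingest("asset-001", b"example file contents")
print(fixity_check("asset-001", b"example file contents", stored))
print(fixity_check("asset-001", b"example file c0ntents", stored))

# A simple "report" on the event log.
for event in event_log:
    print(event["timestamp"], event["asset_id"], event["event"], event["outcome"])
```

MD5 appears here only because it is the example named in the list. It is adequate for detecting accidental corruption, though many preservation workflows prefer SHA-256.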

With this in mind, the question to consider is: what’s good enough when it comes to digital preservation functionality in DAMs?

“Good enough” digital preservation

The concept of “Good enough” digital preservation has been circulating since at least 2014, thanks to groups like Digital POWRR. Essentially, it recognizes that not everyone can achieve or maintain the highest levels of digital preservation, such as those defined by level four of the NDSA Levels of Digital Preservation or full conformance with ISO 16363 (Audit and certification of trustworthy digital repositories), for all digital assets (for all eternity).

For many, these guidelines can feel overwhelming and unattainable. When organizations search for a DAM solution, they often have an expectation that it will solve all digital preservation planning challenges and result in a perfect A+ in digital preservation. However, as we have come to realize, this expectation is not in line with reality.

So, what should you do?

Let’s dive into some ideas on how we can tackle these issues.

Understand the difference between DAM system and Digital Preservation system functionality

First and foremost, organizations should focus on developing a clear understanding of the distinctions between a DAM and a digital preservation system. This knowledge forms the foundation for informed decision-making and empowers organizations to choose the right path.

Clarify your appetite for risk

Next, organizations need to assess their risk comfort levels. What functionalities are essential for their peace of mind? Are there specific data management or digital preservation regulations they must comply with? Can a DAM system meet these requirements effectively? If not, organizations must determine the functionalities that take precedence and decide whether a DAM or digital preservation system is more suitable for their needs.

DAM vendors play a crucial role in this process. It is essential for them to familiarize themselves with basic digital preservation software functionality. This understanding enables them to respond effectively to client requirements and deliver solutions that align with their specific needs.

Request standards compliance

DAM vendors should actively consider aligning with some guidelines from the NDSA Levels of Digital Preservation, for example. By doing so, vendors not only benefit clients with a need for digital preservation but also contribute to the long-term accessibility of assets within the DAM for all users. This alignment has the potential to promote industry-wide best practices and ensures the preservation and availability of digital assets beyond individual client needs.

However, it is essential to recognize that not all DAM systems need to encompass complete digital preservation functionality.

The reality is, some organizations heavily invested in digital preservation may have a particularly low risk tolerance for loss and, despite DAM’s other capabilities, may choose not to depend on it alone to achieve their preservation objectives.

Choosing a solution for preserving digital assets

In light of these considerations, it is crucial for organizations to engage in internal discussions to determine their specific needs and priorities. These conversations should address risk levels and the functionalities that are essential for their peace of mind and compliance with their data management requirements.

By having these dialogues, organizations can collectively define an acceptable level of preservation within the realm of DAM. Although reaching a consensus may present challenges, the goal is to find a comfortable middle ground that satisfies the needs of everyone in the organization. This process not only addresses their requirements effectively but also has the potential to drive innovation within the DAM industry as a whole.

If you are considering acquiring a DAM in the near future and have digital preservation requirements, we are excited to discuss the possibilities with you. AVP is here to assist you in exploring your options and finding the ideal system for your organization. We eagerly await the opportunity to assist you on this journey.

The Importance of Choosing the Right Digital Asset Management System

5 July 2023

As organizations grow and their workflows evolve, so does their need for the right technology. But identifying which tools will meet your needs now — and as your business scales — can be a major undertaking.

Investing in a digital asset management (DAM) solution is no different. While DAM systems are designed to simplify how digital content is organized and managed, selecting the right solution can actually be really complicated. After all, there are dozens of vendors to choose from, all with a unique combination of functionality, features, and services. On the flip side, being able to identify and prioritize your business requirements requires a lot of due diligence.

And unfortunately, if you select a solution that doesn’t meet your needs, there is a range of significant consequences. Let’s take a look at the risks entailed in making the wrong DAM software investment, and how to avoid them.

The Risks of Getting it Wrong

Unwanted Expenses

By the time you realize that you’ve selected a digital asset management solution or system that won’t support your use cases as expected, you will likely be deep into software implementation. This includes configuration, content migration, piloting, and possibly even the beginning of system launch. Many stakeholders will have committed significant time to this initiative.

At this point, it is pretty hard to cut your losses and change course. Not only will there be the hard costs of ending the current contract — but there will be further hefty staffing expenses. Scrapping plan A means starting from scratch with another procurement process and then spending months configuring, migrating, and preparing for roll out — a second time. We all know that time means money, and this redundant work will be costly.

It is not easy to let go of those sunk costs, so most likely you will persevere. You may not be able to tell the difference between a poor implementation and the wrong system. Either way, the challenges will continue to grow in significance and complexity.

Broken Trust

While the technical part of a software launch can be complicated, with numerous timelines and milestones, getting people to embrace the new system can be even more challenging. After all, change is hard — even when it’s for the better. And when the solution doesn’t meet expectations, you risk damaging the trust between you and your stakeholders.

And once this trust is broken, it is difficult to repair. Stakeholders that feel burned or frustrated may not be interested in engaging in the process again, which can have a chilling effect on system adoption and even create a self-fulfilling prophecy that the project is doomed to failure.

Lost Opportunity

In addition to unwanted staffing expenses and damaged trust, investing in the wrong digital asset management system will delay your time to value. In other words, it extends the time needed to realize all of the gains that you were hoping for when you invested in a digital asset management system.

While delays and pivots play out, all of the original challenges that were drivers for making this technology investment continue to grow, such as workflow inefficiencies, poor user experience, brand inconsistencies, and general content chaos. For organizations that manage archival assets, every month can bring the permanent loss of materials due to decay or obsolescence.

Not choosing the right DAM system means that these challenges continue to balloon — greatly prolonging the time until you realize DAM ROI.

Project Viability

A final risk inherent in choosing the wrong digital asset management system is the possibility that it sinks the project entirely. The decision to implement a new DAM system is often part of a larger technology strategy endorsed by executive leadership. And if the initial selection is a failure, it can create waves that cast doubt on the value of the investment.

This loss of confidence can threaten the existence of the entire DAM project — putting careers at risk and leaving a legacy that is difficult to overcome.

How to Choose the Right Digital Asset Management System, the First Time

Clearly, with any major technology investment the stakes are high. And righting the ship after a wrong decision entails considerable work and expense.

That’s why many organizations wondering how to choose a digital asset management system turn to a DAM consultant to guide their selection process. Including a consultant on your team can add clarity and efficiency at every stage of the process and set the project up for success, from identifying specification requirements and drafting a request for proposal (RFP) all the way through vendor evaluation.

In addition to avoiding the risks outlined above, the benefits of working with a top digital asset management systems consultant include: